NVIDIA Research AI Can Learn New Things With Less Training Data

AI and machine learning have become hot topics in tech and even mainstream news, but most people take for granted the work these computers do behind the scenes. Their amazing feats require poring over tens of thousands of related samples at a speed only computers are capable of. That, in turn, assumes there are tens of thousands of samples for the AI to learn from, which may not always be the case. NVIDIA's research arm is now touting a milestone for Generative Adversarial Networks, or GANs, that allows an AI to learn even when presented with a significantly smaller data set.

Although it has "adversarial" in its name, a GAN actually relies on two networks that work in tandem, with each one's feedback improving the other. A generator creates images while a discriminator compares them with reference images to rate whether they match in style, object, or content. For this to work, the discriminator is usually fed 50,000 to 100,000 training images; with far fewer, it simply memorizes the references and is unable to, well, discriminate a synthesized image.

This phenomenon, called overfitting, can be partially addressed by data augmentation, which simply involves randomly rotating, resizing, cropping, or flipping images to expand the number of references. That, however, can produce a generator that learns to mimic the distorted images rather than learning how to properly synthesize styles and themes.
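To make the idea of data augmentation concrete, here is a minimal sketch in plain Python. It treats an image as a grid of pixel values (a list of rows) and produces randomly flipped, rotated, and cropped copies. The function names, the 50% flip chance, and the one-pixel crop are illustrative assumptions, not anything taken from NVIDIA's code.

```python
import random


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window from a random position."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def augment(image, rng):
    """Return one randomly distorted copy of the image."""
    out = [list(row) for row in image]
    if rng.random() < 0.5:            # assumed 50% chance of flipping
        out = flip_horizontal(out)
    for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
        out = rotate_90(out)
    # Assumed crop: trim one row and one column (never below 1 pixel).
    crop_h = max(1, len(out) - 1)
    crop_w = max(1, len(out[0]) - 1)
    return random_crop(out, crop_h, crop_w, rng)


def expand_dataset(images, copies_per_image, seed=0):
    """Turn each reference image into several distorted variants."""
    rng = random.Random(seed)
    return [augment(img, rng) for img in images for _ in range(copies_per_image)]
```

Calling `expand_dataset(images, 5)` yields five distorted variants per reference image. Every one of those variants is distorted in some way, which is exactly why a generator trained on them alone can end up imitating the distortions.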

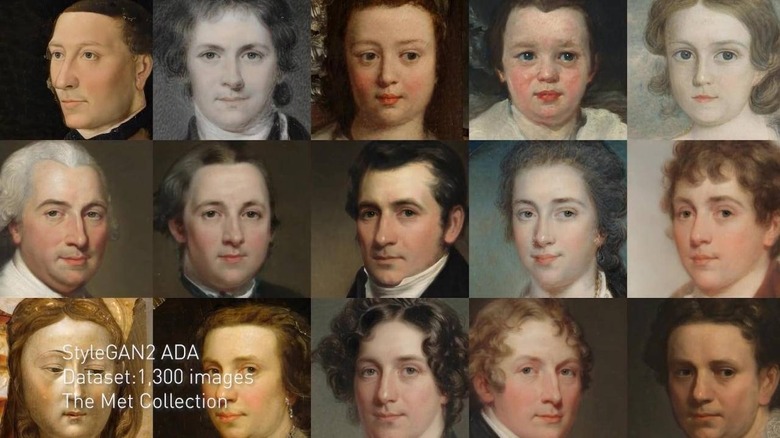

NVIDIA Research's Adaptive Discriminator Augmentation, or ADA, tries to address both problems by applying data augmentation adaptively, adjusting how much of it is used at different points in the training process. The researchers claim that applying it to StyleGAN2 allowed the model to learn artistic styles with a training data set that is 10 to 20 times smaller than what a traditional GAN would have required.
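One way to picture the "adaptive" part is as a feedback loop: measure how confidently the discriminator scores its own training images, and raise or lower the chance of augmenting each image accordingly. The sketch below is a simplified illustration of that idea only. The function names, the target value of 0.6, and the step size are assumptions chosen for the example, not NVIDIA's actual implementation.

```python
def overfitting_signal(real_scores):
    """Mean sign of the discriminator's outputs on real training images.

    Values near 1 mean the discriminator is very sure about every
    training image, which hints that it is memorizing them.
    """
    signs = [1 if s > 0 else -1 if s < 0 else 0 for s in real_scores]
    return sum(signs) / len(signs)


def update_probability(p, real_scores, target=0.6, step=0.01):
    """Nudge the augmentation probability p toward less overfitting.

    `target` and `step` are assumed values for illustration.
    """
    signal = overfitting_signal(real_scores)
    if signal > target:
        p += step   # too confident: augment more often
    elif signal < target:
        p -= step   # not overfitting: augment less often
    return min(1.0, max(0.0, p))  # keep p a valid probability
```

In a training loop, `update_probability` would be called every few batches with the discriminator's latest scores, and each image would then be augmented with probability `p`. Early in training `p` stays low, so the generator mostly sees clean images; it only climbs if the discriminator starts memorizing.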

The applications of NVIDIA Research's new AI go beyond just synthesizing art styles for the sake of filters, though Adobe will most likely be interested in it to augment Photoshop's Neural Filters, which are already based on the first-gen StyleGAN. This AI could also learn from medical images in cases where scans or samples are too few to train a conventional model effectively, such as new kinds of diseases or disorders.