MIT Researchers Try to Teach Machines to Reason About What They See

Humans can reason without having to think about it. Ask a child to describe a pink elephant, for example, and the child can do so despite never having seen one. Computers aren't so good at this sort of reasoning because they learn from data. MIT scientists are trying to give machines the ability to reason about what they see. To do that, AI researchers are using abstract, or symbolic, programs. This sort of AI can have rules wired in that let it interpret what it sees, and it can then compare objects to determine how entities relate.

A symbolic AI uses less data and records the chain of steps taken to reach a decision. Scientists say that when a symbolic AI is combined with the processing power of a statistical neural network, it can beat humans at image comprehension tests.

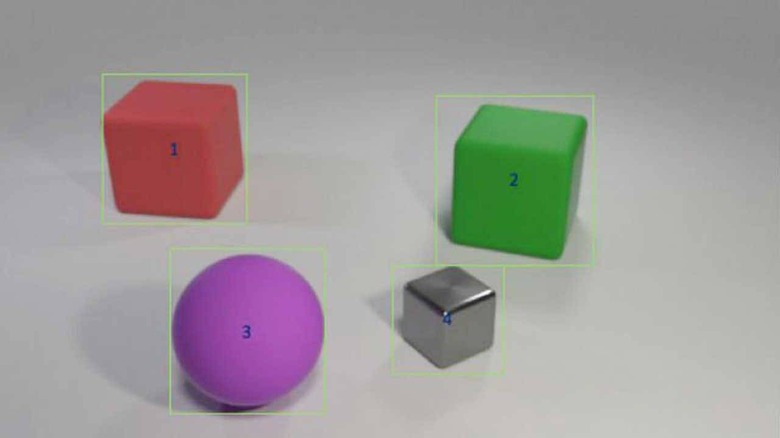

Using a hybrid statistical and symbolic AI, the MIT team has shown that the AI can learn object-related concepts, like color, and use that knowledge to interpret complex relationships in a scene and answer complex questions about it.

The team could ask things like, "How many objects are both right of the green cylinder and have the same material as the small blue ball?" The AI was able to give an answer, and when it was unable to provide one, the model was updated. The researchers say that the hybrid AI outperformed its peers using a fraction of the data the other models were trained on. The other models were trained on the CLEVR dataset with 70,000 images and 700,000 questions, whereas the hybrid AI used 5,000 images and 100,000 questions.
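
To make the idea of a symbolic program more concrete, here is a minimal Python sketch of how the example question could be broken into small, explicit steps over a scene description. It is only an illustration, not the MIT team's system: the `Obj` class, the `SCENE` contents, and the helper names (`select`, `unique`, `right_of`, `same_material`, `answer`) are all hypothetical, and the scene is written by hand, whereas in the hybrid approach a neural network would be responsible for extracting the objects and their attributes from the image.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Obj:
    """One object in a hand-written, hypothetical scene description."""
    name: str
    color: str
    shape: str
    size: str
    material: str
    x: float  # horizontal position; a larger x means further right


# Made-up scene for illustration only (not taken from CLEVR).
SCENE = [
    Obj("a", "green", "cylinder", "large", "metal", 1.0),
    Obj("b", "blue", "sphere", "small", "rubber", 0.5),
    Obj("c", "red", "cube", "large", "rubber", 2.0),
    Obj("d", "yellow", "sphere", "small", "metal", 3.0),
    Obj("e", "purple", "cube", "small", "rubber", 0.2),
]


def select(objs, **attrs):
    """Keep the objects whose attributes match every given value."""
    return [o for o in objs if all(getattr(o, k) == v for k, v in attrs.items())]


def unique(objs):
    """Return the single matching object, or fail if the reference is ambiguous."""
    if len(objs) != 1:
        raise ValueError(f"expected exactly one object, found {len(objs)}")
    return objs[0]


def right_of(objs, anchor):
    """Objects positioned to the right of the anchor."""
    return [o for o in objs if o.x > anchor.x]


def same_material(objs, anchor):
    """Objects, other than the anchor itself, that share its material."""
    return [o for o in objs if o is not anchor and o.material == anchor.material]


def answer(scene):
    """Count objects right of the green cylinder that share the small blue
    ball's material, recording each reasoning step in a trace."""
    trace = []
    cylinder = unique(select(scene, color="green", shape="cylinder"))
    trace.append(f"found green cylinder: {cylinder.name}")
    ball = unique(select(scene, color="blue", shape="sphere", size="small"))
    trace.append(f"found small blue ball: {ball.name}")
    right = right_of(scene, cylinder)
    trace.append(f"right of cylinder: {[o.name for o in right]}")
    same = same_material(scene, ball)
    trace.append(f"same material as ball: {[o.name for o in same]}")
    both = [o for o in right if o in same]
    trace.append(f"satisfy both conditions: {[o.name for o in both]}")
    return len(both), trace


if __name__ == "__main__":
    count, trace = answer(SCENE)
    for step in trace:
        print(step)
    print("answer:", count)
```

Because every step is an explicit operation, the returned trace shows how the answer was reached, which is the "chain of steps" property described earlier. On the dataset sizes quoted above, 5,000 of 70,000 images is roughly 7 percent and 100,000 of 700,000 questions is roughly 14 percent.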