Interactive Dynamic Video Could Improve AR And Eliminate CGI Green Screen

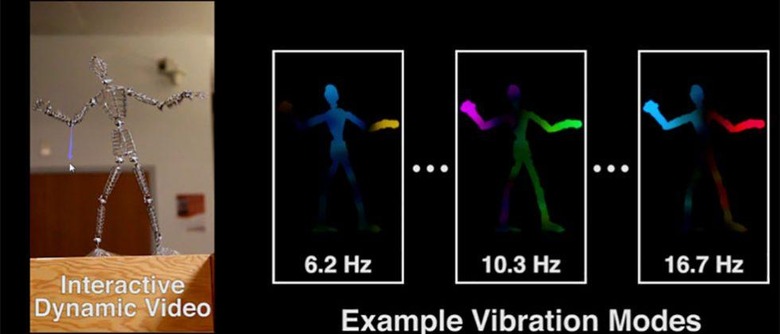

Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) are developing a new imaging technique called Interactive Dynamic Video (IDV). IDV lets users virtually reach in and touch objects they see in a video, and it could hold great promise for improving augmented reality. Using traditional cameras and algorithms, IDV analyzes the tiny, nearly invisible vibrations an object produces and uses them to build video simulations that users can interact with in a virtual environment.
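
To give a feel for what "looking at tiny vibrations" involves, here is a minimal sketch of generic spectral analysis in Python with NumPy. It assumes a motion signal has already been measured for one point in the video (the real system derives motion from the video pixels, which is not shown here) and finds the frequencies at which that point vibrates most strongly. The function `dominant_frequencies` and all numbers are hypothetical; this is not the CSAIL implementation.

```python
import numpy as np

def dominant_frequencies(signal, sample_rate, k=2):
    """Return the k frequencies (Hz) with the largest spectral magnitude,
    strongest first. Simple top-k selection with no peak grouping."""
    # Remove the mean so the zero-frequency (DC) bin does not dominate
    centered = signal - np.mean(signal)
    spectrum = np.abs(np.fft.rfft(centered))
    freqs = np.fft.rfftfreq(len(centered), d=1.0 / sample_rate)
    top = np.argsort(spectrum)[-k:][::-1]
    return freqs[top]

# Hypothetical example: 10 seconds of video at 100 frames per second,
# with a point vibrating by a few thousandths of a pixel at 3 Hz and 7.5 Hz
rate = 100.0
t = np.arange(1000) / rate
rng = np.random.default_rng(0)
signal = (0.002 * np.sin(2 * np.pi * 3.0 * t)
          + 0.001 * np.sin(2 * np.pi * 7.5 * t)
          + 1e-5 * rng.standard_normal(t.size))  # small measurement noise

freqs = dominant_frequencies(signal, rate, k=2)
```

Even though the motion is far smaller than a pixel, it repeats consistently over many frames, so its frequencies stand out clearly in the spectrum.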

"This technique lets us capture the physical behavior of objects, which gives us a way to play with them in virtual space," says CSAIL PhD student Abe Davis, who will be publishing the work this month as part of his final dissertation. "By making videos interactive, we can predict how objects will respond to unknown forces and explore new ways to engage with videos."

Davis believes the new technique might allow filmmakers to produce new visual effects and could have a far-ranging impact on other fields, for example by helping architects determine whether buildings are structurally sound. In a virtual world, the technique could let virtual objects in a game interact with, and bounce off, objects in the real world. Imagine a Pokémon Go creature bouncing off the leaves of a nearby bush.

The technique relies on an object's vibration modes: patterns of motion at different frequencies, each representing a distinct way the object can move. Once these mode shapes are identified, the researchers can predict how the object will move in response to new forces.
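
To make the idea of mode shapes concrete, here is a minimal sketch of textbook modal superposition in Python with NumPy. It assumes the mode shapes, frequencies, and damping ratios have already been recovered (the step the CSAIL work performs from video), treats each mode as an independent damped oscillator with unit modal mass, and predicts how a handful of points respond to a sudden poke. The function `impulse_response` and every number below are hypothetical; this is not the researchers' implementation.

```python
import numpy as np

def impulse_response(mode_shapes, freqs_hz, damping, impulse, times):
    """Predict displacements after an impulse using modal superposition.

    mode_shapes: (n_modes, n_points) displacement pattern of each mode
    freqs_hz:    (n_modes,) natural frequency of each mode in Hz
    damping:     (n_modes,) damping ratio of each mode (0 < zeta < 1)
    impulse:     (n_points,) impulse applied to each point at t = 0
    times:       (n_times,) sample times in seconds
    returns:     (n_times, n_points) displacement of every point over time
    """
    omega = 2 * np.pi * np.asarray(freqs_hz)   # natural angular frequencies
    zeta = np.asarray(damping)
    omega_d = omega * np.sqrt(1 - zeta**2)     # damped angular frequencies
    # Project the impulse onto each mode; with unit modal mass this is
    # the initial velocity of each mode's coordinate
    v0 = mode_shapes @ impulse                 # (n_modes,)
    t = np.asarray(times)[:, None]             # (n_times, 1) for broadcasting
    # Free response of each damped oscillator, starting at rest with velocity v0
    q = (v0 / omega_d) * np.exp(-zeta * omega * t) * np.sin(omega_d * t)
    # Superpose the modes: displacement = sum over modes of q_i(t) * shape_i
    return q @ mode_shapes

# Hypothetical example: 5 points along a leaf, attached at point 0
shapes = np.array([
    [0.0, 0.25, 0.5, 0.75, 1.0],  # mode 1: simple bend
    [0.0, 0.5, 0.0, -0.5, 1.0],   # mode 2: S-shaped bend
])
poke = np.array([0.0, 0.0, 0.0, 0.0, 1.0])  # impulse at the tip
times = np.linspace(0.0, 2.0, 201)
u = impulse_response(shapes, [3.0, 7.5], [0.05, 0.1], poke, times)
```

In this linear model each mode evolves independently, so responding to a different force only changes the projection `v0`. That is why, once the mode shapes are known, the response to new forces can be computed cheaply.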

"Computer graphics allows us to use 3-D models to build interactive simulations, but the techniques can be complicated," says Doug James, a professor of computer science at Stanford University who was not involved in the research. "Davis and his colleagues have provided a simple and clever way to extract a useful dynamics model from very tiny vibrations in video, and shown how to use it to animate an image."

SOURCE: MIT