Inside Qualcomm's big C-V2X plans for tomorrow's smart cars

I've always felt nervous using adaptive cruise control (ACC) on a busy highway. ACC has always left too much of a gap between my car and the car ahead: even with ACC set to one car length, the minimum most automakers allow, I felt the gap would let someone sneak in, and I was never sure how the system would react. But what if your car already knew that someone was thinking about sneaking into that gap before you even realized it?

Enter Qualcomm Technologies' Cellular Vehicle-to-Everything (C-V2X) solution, based on the 3rd Generation Partnership Project (3GPP) Release 14 specifications for direct communications and built on the Qualcomm 9150 C-V2X chipset. Release 14 covers LTE Mission Critical enhancements, LTE support for V2X services, enhanced Licensed-Assisted Access (eLAA), four-band carrier aggregation, inter-band carrier aggregation, and more.

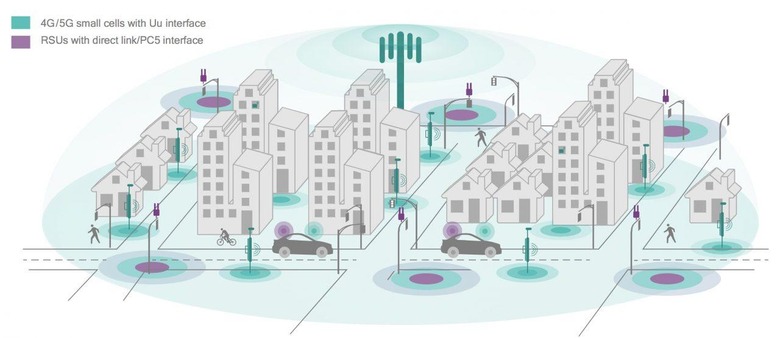

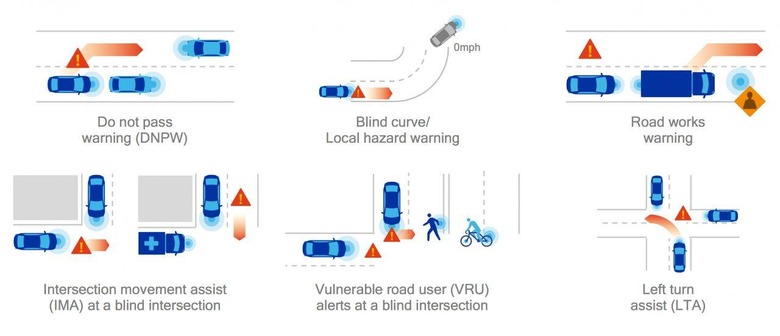

C-V2X works in two transmission modes: direct and network-based. Both promise to deliver critical safety data for autonomous driving, either directly between cars or via an overarching cloud network. C-V2X isn't designed to replace existing Advanced Driver Assistance Systems (ADAS) sensors such as cameras, radar, and LIDAR. Instead, it complements them with information about the car's surroundings, including non-line-of-sight awareness of other vehicles on the road. The goal is a safety network of millions of vehicles working together to make driving safer.

When communicating directly between vehicles, Qualcomm's C-V2X uses low-latency transmissions in the 5.9 GHz band, which carry data in real time at high data rates. This removes the dependency on the cellular network, along with the added latency of sending and receiving data through it.
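To put that latency difference in perspective, here is a back-of-the-envelope sketch of how far a car travels while a warning is still in flight. The 20 ms and 100 ms latencies are assumptions chosen purely for illustration, not Qualcomm or 3GPP figures, and `metres_travelled` is a hypothetical helper.

```python
def metres_travelled(speed_kmh: float, latency_ms: float) -> float:
    """Distance a vehicle covers while a message is in flight."""
    metres_per_second = speed_kmh / 3.6  # convert km/h to m/s
    return metres_per_second * latency_ms / 1000.0


# Hypothetical latencies for illustration only; real figures depend on
# radio conditions, network load, and where the relaying server sits.
SPEED_KMH = 110.0  # roughly 68 mph
for label, latency_ms in (("direct 5.9 GHz link", 20.0),
                          ("relayed via cellular network", 100.0)):
    distance = metres_travelled(SPEED_KMH, latency_ms)
    print(f"{label}: {distance:.1f} m at {SPEED_KMH:.0f} km/h")
```

Because distance scales linearly with latency, under these assumed numbers the relayed warning arrives after the car has moved five times as far as it would with the direct link.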

The network-based mode, meanwhile, uses the cellular network to reach vehicles that are out of sight. That means your car could alert other autonomous vehicles miles from your current position to your intentions, such as your speed and destination. Beyond communications, C-V2X opens the door to 5G unified connectivity, 3D mapping with precise positioning (down to the exact travel lane), and onboard intelligence.

Although Qualcomm has been talking about technology like this for some time, C-V2X is not just a concept. The company has partnered with many of the world's top automakers and Tier-1 suppliers to start introducing the system into cars within the next two years.

At IAA, I sat down with Patrick Little, SVP & GM of Qualcomm Automotive, to get an update on the future of communications, compute, and autonomy. While Qualcomm may be best known for its smartphone chips, the company has been quietly working in the background on processors and connectivity for the automotive industry. Qualcomm has worked with Audi on connectivity for many years, for instance, starting with the 3G MMI systems and providing in-car cellular and Wi-Fi hotspot connectivity. The company sees C-V2X as building on that work and on those early partnerships.

Though it's not the only chipmaker eyeing the space, Qualcomm is confident it will quickly outpace the current market leaders. Little doesn't expect the current technology to be leapfrogged; instead, he expects a gradual progression into Level 3 and Level 4 autonomy. When asked about Nvidia's current role in the marketplace, he acknowledged that "Nvidia has attacked the hearts and minds of the industry early." Still, he argues that its rival's compute systems remain more of a proof of concept and are not elegant enough to be fully commercialized.

Part of that elegance comes down to cooling: current chipsets are simply not designed to dissipate the heat that comes with more intensive automotive processing. Water cooling brings its own performance and power tradeoff. For electric vehicles, where preserving range matters, diverting electricity to power and cool the compute systems is a problem. "If you burn too much power on autonomy then you will reduce the range," Little points out. "We need to be very discerning about our workloads."

Little sees Qualcomm's entry point into the market as refining Level 3 autonomous driving. There's still a lot of work to do on silicon design and on extracting the most performance, not least in delivering on the argument that the chips shouldn't need complex cooling systems. Qualcomm is counting on Snapdragon being up to the task, with machine learning running on DSPs combined with an NPU (Neural Processing Unit) to substantially reduce power consumption.

Being smart about how available energy is distributed will help too. Just as at home, phantom power draw is a problem for EVs, so cutting power to any motor or device that is drawing current unnecessarily will help extend driving range. The ability to switch these components off completely has already been developed and is in use in other Qualcomm systems.
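Little's point about range is simple arithmetic: an always-on load such as an autonomy computer, its cooling, or phantom draw consumes energy per hour, so it adds `aux_kw / speed_kmh` kWh to every kilometre driven. The sketch below assumes a hypothetical 75 kWh pack, 0.18 kWh/km drivetrain consumption, and a steady 100 km/h; none of these are Qualcomm or automaker figures, and `range_km` is a hypothetical helper.

```python
def range_km(usable_kwh: float, drive_kwh_per_km: float,
             aux_kw: float, speed_kmh: float) -> float:
    """Estimate range when a constant auxiliary load runs alongside the drivetrain.

    aux_kw covers always-on loads such as an autonomy computer, its cooling,
    and phantom draw. Covering one km takes 1/speed_kmh hours, so the
    auxiliary load adds aux_kw / speed_kmh kWh to every km driven.
    """
    if speed_kmh <= 0:
        raise ValueError("speed_kmh must be positive")
    per_km = drive_kwh_per_km + aux_kw / speed_kmh
    return usable_kwh / per_km


# Hypothetical figures for illustration only (not Qualcomm or automaker data).
BATTERY_KWH = 75.0        # usable pack energy
DRIVE_KWH_PER_KM = 0.18   # drivetrain consumption at highway speed
SPEED_KMH = 100.0

baseline = range_km(BATTERY_KWH, DRIVE_KWH_PER_KM, 0.0, SPEED_KMH)
for load_kw in (0.5, 1.0, 2.5):
    r = range_km(BATTERY_KWH, DRIVE_KWH_PER_KM, load_kw, SPEED_KMH)
    loss_pct = 100 * (baseline - r) / baseline
    print(f"{load_kw:.1f} kW extra load: {r:.0f} km ({loss_pct:.1f}% less than {baseline:.0f} km)")
```

Under these assumptions a 2.5 kW load costs about 12% of range. The penalty also grows as speed drops, because the load runs for more hours per kilometre, which is one reason switching off idle components matters in stop-and-go traffic.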

The C-V2X non-line-of-sight solution will be part of an "electronic envelope that will save a tremendous amount of lives with car to car, car to infrastructure, and car to pedestrian leading the way," Little explained. It starts with the drive data platform, where sensor fusion combines inputs from GNSS, inertial sensors, and cameras with 3D HD maps to present an accurate picture of the car's surroundings. This data is then shared with the network of cars and infrastructure.
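Sensor fusion of this kind is commonly done with a Kalman filter. The sketch below is a generic textbook technique, not Qualcomm's drive data platform: a one-dimensional filter in which inertial (IMU) acceleration predicts the car's position along its lane and each GNSS fix corrects it. The class name `PositionFilter` and all noise values are hypothetical; a production system would track a full 3D state and also fold in camera and HD-map inputs.

```python
from dataclasses import dataclass


@dataclass
class PositionFilter:
    """1D Kalman filter: IMU acceleration drives the prediction, GNSS corrects it."""
    pos: float = 0.0        # metres along the lane
    vel: float = 0.0        # metres per second
    p00: float = 10.0       # covariance of [pos, vel] (symmetric 2x2)
    p01: float = 0.0
    p11: float = 10.0
    accel_var: float = 0.5  # assumed IMU acceleration noise, (m/s^2)^2
    gnss_var: float = 4.0   # assumed GNSS position noise, m^2

    def predict(self, accel: float, dt: float) -> None:
        # Propagate the state with constant-acceleration kinematics.
        self.pos += self.vel * dt + 0.5 * accel * dt * dt
        self.vel += accel * dt
        # P = F P F^T + Q, with F = [[1, dt], [0, 1]] and Q from acceleration noise.
        p00 = self.p00 + dt * (2 * self.p01 + dt * self.p11)
        p01 = self.p01 + dt * self.p11
        q = self.accel_var
        self.p00 = p00 + q * dt**4 / 4
        self.p01 = p01 + q * dt**3 / 2
        self.p11 = self.p11 + q * dt**2

    def update(self, gnss_pos: float) -> None:
        # Measurement model H = [1, 0]: GNSS observes position only.
        s = self.p00 + self.gnss_var  # innovation variance
        k0 = self.p00 / s             # gain applied to position
        k1 = self.p01 / s             # gain applied to velocity
        innovation = gnss_pos - self.pos
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        # P = (I - K H) P
        p00, p01, p11 = self.p00, self.p01, self.p11
        self.p00 = (1 - k0) * p00
        self.p01 = (1 - k0) * p01
        self.p11 = p11 - k1 * p01


# Example: a car cruising at a steady 20 m/s with GNSS fixes off by +/-1.5 m.
f = PositionFilter(vel=20.0)
dt = 0.1
for step in range(1, 201):
    truth = 20.0 * step * dt
    noise = 1.5 if step % 2 else -1.5
    f.predict(accel=0.0, dt=dt)
    f.update(gnss_pos=truth + noise)
print(f"estimated {f.pos:.2f} m vs true {20.0 * 200 * dt:.2f} m")
```

Because the filter weighs each GNSS fix against its own running uncertainty, the jitter in the example fixes is largely smoothed out, while the inertial prediction keeps the estimate moving between fixes.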

It's the missing piece of full autonomy. The data sharing will help realize the full potential of current autonomous driving platforms. Cars that are 2 miles or 20 miles away will know you are sitting in traffic, along with all the other data your car has collected.

The challenge is working out which C-V2X building blocks will scale commercially and efficiently. Who will build the new infrastructure? Cities and towns often struggle to maintain their existing roads, highways, and bridges. The current thinking is to work within the existing ecosystem of mobile network operators. "Can we create a network that will help get the infrastructure established quickly?" That's the billion-dollar question right now. Little sees an opportunity for whoever installs the infrastructure, but the providers need to see a business case to justify the spend. The hope is that evolving the mobile network will open up new business models, with the biggest markets expected to be North America and China. In rural areas without an advanced mobile network and V2X infrastructure, the fallback will be direct car-to-car and car-to-pedestrian communication.

Within the 3rd Generation Partnership Project (3GPP), the C-V2X standards will evolve up to Release 16. C-V2X has significant advantages over dedicated short-range communications (DSRC). Unlike the 802.11p technology on which DSRC is based, C-V2X will take advantage of 5G wireless technology. It also builds on the investment global mobile network operators are already making: their networks can expand to include a vehicle-to-infrastructure (V2I) network, in partnership with roadway operators, as part of existing IoT investments in industrial, smart city, and smart transportation infrastructure.

Little and I also discussed the future of autonomy. There are lots of ways to cooperate and build proper standards with the governing bodies and regulatory committees, he suggested, and it will take a significant combined effort to move the industry forward. Everyone needs to come to the table to establish these standards, Little says, not only to recognize why machines could be better drivers than humans, but also to settle the not-insignificant moral questions around self-driving vehicles.

Driving typically demands all six senses, yet at most a driver can dedicate 2.5 of them to the road. "Machines will always win in the sense category," Little argues. "The sensors will always be better than the human eyes and ears." Sensors are dedicated to their job and are not easily distracted; there's no hesitation, and the car will be able to apply the brakes automatically once the AI makes the decision. There's no second-guessing.

The future also involves human-style thinking, though: can we train a machine to think like us, and do we really want to? While the technology may be advanced, we're still unclear on how to train an autonomous car to make a moral judgement. "What if the car needs to make a decision to hit a pedestrian or crash into a cafe full of people?" Little asks. Right now there's no consensus, and the current sense in the industry is that we're potentially 20 to 25 years away from solving this part of the equation.

"[In] the transition from Level 3 to Level 4 and 5, as the car starts to make decisions ... there will be a temporal chasm," Little argues, "and we as humans need to become comfortable enough with the algorithms and their outcomes to make the transition possible." For the moment, the more immediate direction is a focus on refining Level 3. After that comes a whole new conversation, and not just one between cars.