Google Lens In Google Assistant: Here's What It Can Do

Google has always boasted about its ability to churn numbers and data into something meaningful, from the earliest days of personalized search results to the many applications of Google Assistant today. It has also been working hard in the field of computer vision, as seen in its impressive Pixel cameras and Google Lens. Now it is bringing the two together, not in a single app, but in a combination that harnesses the power of Google Assistant, seen through the eyes of Google Lens.

Those who have followed Google's many projects, some of which never saw the light of day, will probably recognize Google Goggles' DNA in Google Lens. Simply put, Google Lens "sees" a real-world object, uses computer vision and machine learning to identify what it is, and then taps into Google's repository of knowledge to surface information about it.

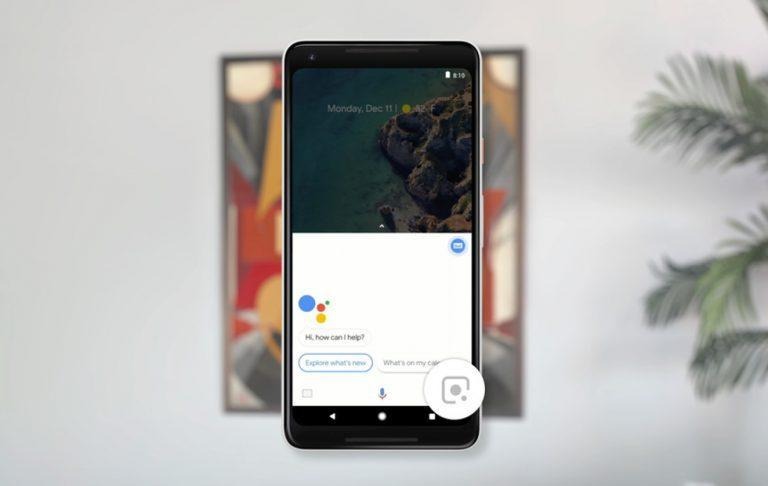

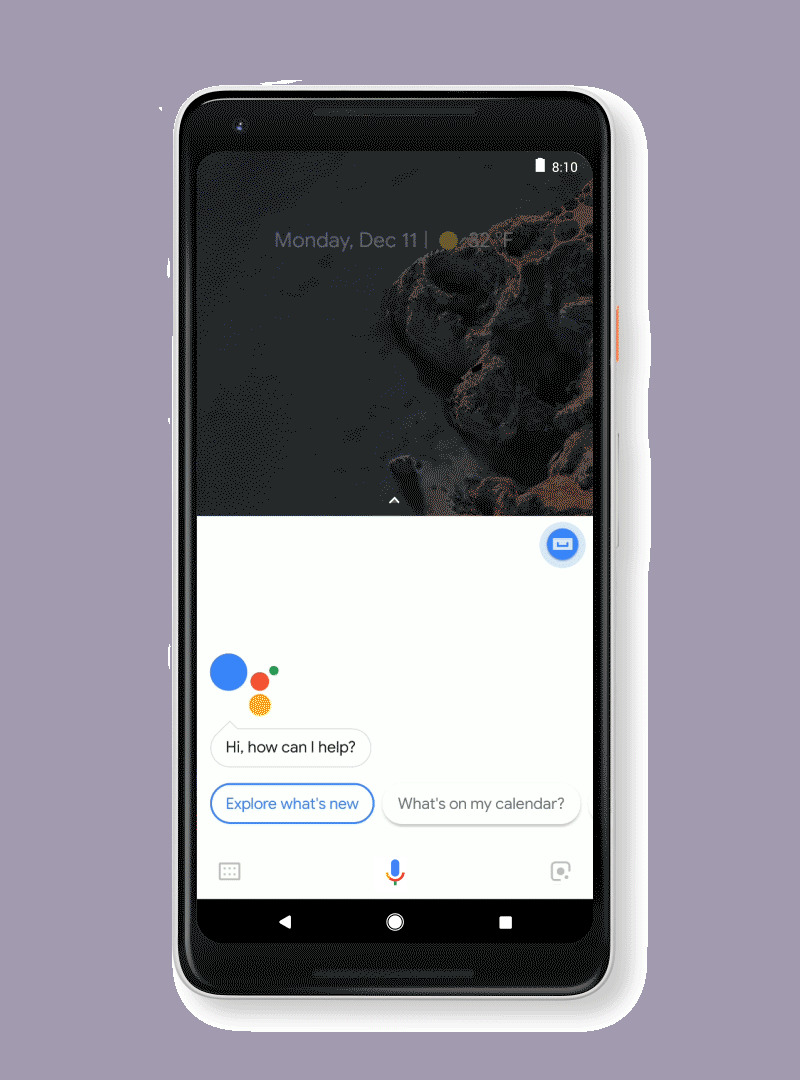

All of that is now available to Pixel and Pixel 2 owners with a single tap of an icon in Google Assistant. You might wonder why Google would bring the two together. While Google Lens is good at identifying what an object or item is, it doesn't exactly help you do much with it. That's where Google Assistant comes in.

Say you pull up Google Assistant and point your Pixel's camera at a business card or barcode. After Google Lens identifies the text, links, or code in the image, Google Assistant can help you create a new contact from it or shop for the product online. Google Assistant can also pull up relevant information about landmarks identified by Google Lens, or even an artist's biography just by "looking" at his or her work in a museum.

Google Lens in Google Assistant is rolling out to all Pixel phones in the US, the UK, Australia, Canada, India, and Singapore, as long as those phones are set to English. Google Lens is also available in Google Photos, so you don't have to fret if you missed the moment, as long as you didn't miss taking a picture.

SOURCE: Google