Fake Obama Lipsync Has Terrifying Implications For Video Evidence

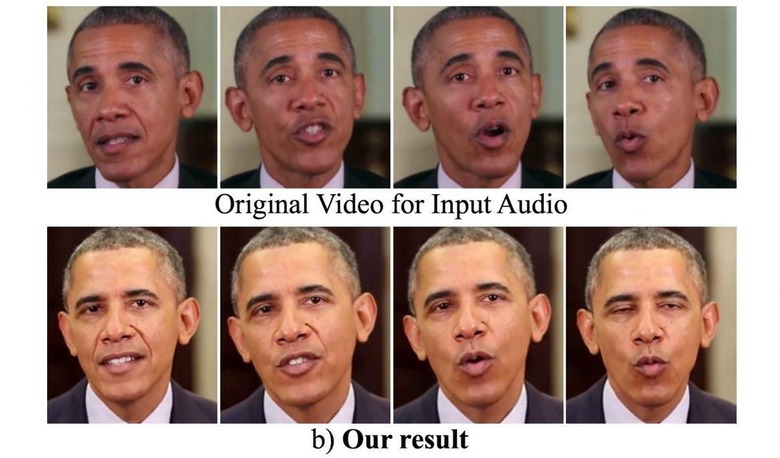

"The camera never lies" may not have ever been especially true, but new research into lip-sync for computer-generated mouths could one day leave all videos suspect. The research, by a group at the University of Washington, details how by training a neural network it can be used to generate a computer-controlled mouth to map onto a chunk of real footage. In their example, presented at SIGGRAPH 2017, a section from one of President Obama's speeches was used to create a totally new video of him apparently speaking it.

"Trained on many hours of his weekly address footage, a recurrent neural network learns the mapping from raw audio features to mouth shapes," researchers Supasorn Suwajanakorn, Steven M. Seitz, and Ira Kemelmacher-Shlizerman explain. "Given the mouth shape at each time instant, we synthesize high quality mouth texture, and composite it with proper 3D pose matching to change what he appears to be saying in a target video to match the input audio track. Our approach produces photorealistic results."

Now, it's worth noting that the current output is still slightly "off" when you watch it. Certainly, it wouldn't be too difficult for someone to spot that it was not actually Obama speaking. However the implications as the technology is advanced are considerable, particularly in a fast-paced cable news environment where viewers can be quick to believe what they're told is real.

The system works by first figuring out a rough mouth shape for the different sounds in an audio file. From that, a more realistic mouth texture can be rendered, and that is then overlaid onto a target video. Finally, the two are rematched and re-timed, so that the movements of the mouth follow those of the "donor" head.

It's a similar approach to prior research, the trio notes, but differs in a few important ways. Earlier attempts at faking videos in this way have focused on transplanting video of lip movement onto another video. In contrast, this technique creates an entirely new, virtual mouth.

While there are clearly some concerning possibilities for eventually putting fake words into the mouths of people who never spoke them, Suwajanakorn, Seitz, and Kemelmacher-Shlizerman argue that there are useful applications too. "The ability to generate high quality video from audio could significantly reduce the amount of bandwidth needed in video coding/transmission (which makes up a large percentage of current internet bandwidth)" they point out. "For hearing-impaired people, video synthesis could enable lip-reading from over-the-phone audio. And digital humans are central to entertainment applications like film special effects and games."

The future, they suggest, could involve modeling anybody's typical facial movements through a video source, such as analyzing Skype videos of them. How practical that might be in the real-world remains to be seen, but if you needed re-convincing not to always believe your eyes, this is probably the research to do it.