Google Just Introduced Multisearch - Here's Why You Should Care

Google today announced that it's rolling out a new multisearch feature geared toward helping with those particularly tricky searches. While using search can usually be a straightforward process, that isn't always true, especially when you're trying to find something like a piece of clothing in a different color or a new piece of furniture that matches what you already have in your house. With multisearch, Google is looking to use AI to make those searches easier (or at least more fruitful).

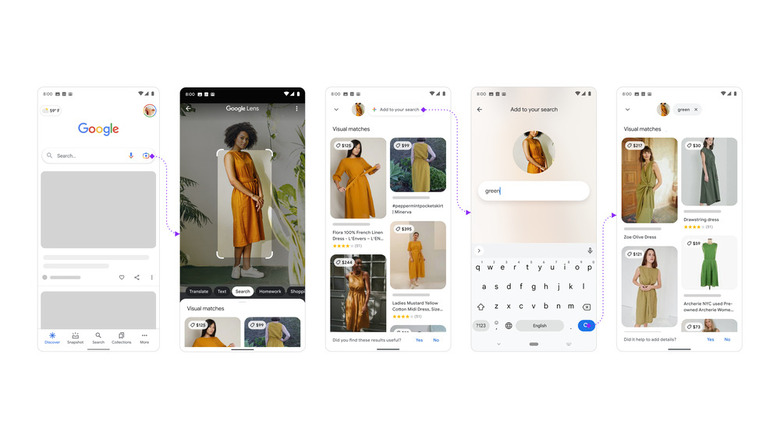

Multisearch is a new feature rolling out for Google Lens, which itself is found in the Google app on Android and iOS. It uses text and images at the same time to help users make their queries more specific and narrow down searches that would be too vague with text or images alone. It sounds like it should offer solid functionality, though it could be one of those features that gets better over time since it's based on AI.

How to use multisearch with Google Lens

To use multisearch, you'll first need an image of something you want to search for. This can be a screenshot or a photo, according to a new blog post on The Keyword. Once you've picked the image you want to use in Lens, swipe up and then tap "+Add to your search." From there, you can add text to further refine your search. Google says that multisearch allows users to find out more about items they encounter in the wild or refine searches "by color, brand or a visual attribute." Some of the examples Google gives make a fairly compelling case for the function. One scenario involves taking a picture of an orange dress and using multisearch to find that same garment in green. Another involves using a picture of a plant to look up care instructions for it. We imagine multisearch might also be helpful for identifying plants in the first place.

While multisearch could be a useful feature, its functionality may be somewhat limited at the start. For now, it's rolling out only in beta in the United States, with support only for English, which means most of the world won't be able to tap into this technology yet. Google also says that multisearch works best for shopping-related searches, though with AI backing it, it probably won't be long before the feature is up and running for all kinds of searches. The company is looking into how its Multitask Unified Model, which allows people to perform very specific searches through a combination of images and questions, could work with multisearch in the future, so we'll stay tuned for more details on that front.