Tesla's Dojo Supercomputer Begins Production To Train Autopilot Worthy Of Its Name

Tesla has just released its earnings report for the second quarter of 2023, and beyond the financial figures, it revealed one crucial piece of information: its Dojo supercomputer has entered production. The highly ambitious machine will be used to train and improve the automaker's Autopilot driver-assistance system. "The better the neural net training capacity, the greater the opportunity for our Autopilot team to iterate on new solutions," the company says.

The supercomputer will play a crucial part in developing Autopilot, training its neural networks on real-world data collected from Tesla cars as well as data generated by simulation models. While Dojo entering production is a major achievement for Tesla, the company's goals for it are far more ambitious.

Tesla's internal projections indicate that Dojo will rank among the world's five most powerful supercomputers before the first quarter of 2024 and, by Tesla's estimates, will reach 100 exaFLOPS of processing power by October 2024. Right now, the world's fastest supercomputer is HPE's Frontier, which delivers 1.194 exaFLOPS of raw compute. In second place is the RIKEN Center for Computational Science's Fugaku, at 442.01 petaFLOPS, and HPE's LUMI takes third. These rankings come from the TOP500 list, which measures double-precision (FP64) LINPACK performance, while AI-training figures such as Tesla's are usually quoted at much lower precision, so the two sets of numbers are not directly comparable.

How is Dojo going to assist Tesla?

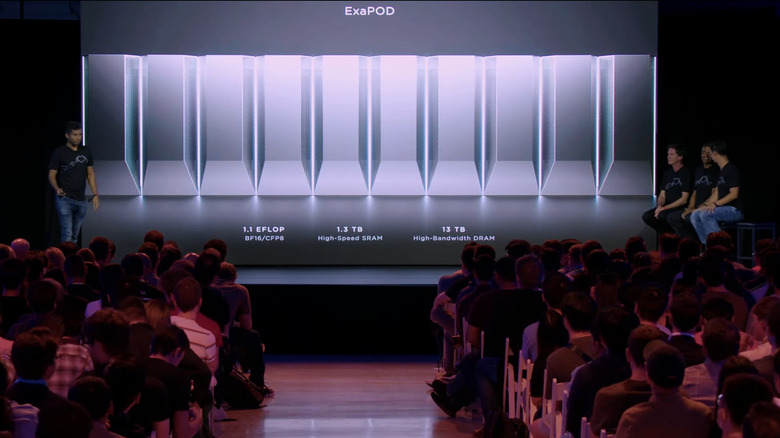

At the moment, Tesla relies on a supercomputer built from Nvidia A100 GPUs: 5,760 of them spread across 720 nodes, delivering 1.8 exaFLOPS. Dojo, however, is designed to leapfrog those figures. Tesla has previously claimed that just a few Dojo cabinets can handle the same auto-labeling workload as thousands of clustered GPUs. Instead of off-the-shelf chips, Dojo relies on Tesla's own D1 chip, manufactured by TSMC on its 7nm process. And rather than following the centralized architecture of a conventional data center, Dojo is a modular, scalable machine whose largest building block is the ExaPOD.
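The A100 cluster figures can be cross-checked with simple arithmetic. The minimal sketch below assumes eight GPUs per node, as in Nvidia's DGX A100 servers (the article gives only the GPU count and the total throughput). Under that assumption, 5,760 GPUs occupy 720 nodes, and 1.8 exaFLOPS works out to about 312 teraFLOPS per GPU. The function names are illustrative only.

```python
# Sanity check of the quoted A100 cluster figures.
# Assumption: each node is an 8-GPU server (DGX A100 style); the article
# itself states only the GPU count and the aggregate throughput.

TOTAL_GPUS = 5_760
GPUS_PER_NODE = 8           # assumed, not stated in the article
CLUSTER_FLOPS = 1.8e18      # 1.8 exaFLOPS, as quoted


def nodes_needed(total_gpus: int, gpus_per_node: int) -> int:
    """Number of nodes required to house total_gpus (rounded up)."""
    return -(-total_gpus // gpus_per_node)  # ceiling division


def per_gpu_tflops(cluster_flops: float, total_gpus: int) -> float:
    """Average peak throughput per GPU, in teraFLOPS."""
    return cluster_flops / total_gpus / 1e12


if __name__ == "__main__":
    print(nodes_needed(TOTAL_GPUS, GPUS_PER_NODE))    # 720
    print(per_gpu_tflops(CLUSTER_FLOPS, TOTAL_GPUS))  # 312.5
```

Roughly 312 teraFLOPS matches Nvidia's advertised dense BF16/FP16 tensor-core peak for a single A100, which suggests the 1.8-exaFLOPS figure is a half-precision AI number rather than an FP64 LINPACK score of the kind TOP500 uses.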

Each D1 chip contains over 300 computing cores (354, according to Tesla), and 25 D1 chips are combined to form a training tile. Six tiles make up a single System Tray, two System Trays fill one cabinet, and ten cabinets form one ExaPOD. Tesla offered a detailed look at Dojo at its AI Day event last year.
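The packaging hierarchy multiplies out quickly, and a short sketch makes the totals explicit. The tray, cabinet, and ExaPOD multipliers come from the paragraph above; the 25 chips per tile, 354 cores per chip, and 362 teraFLOPS per chip are assumptions based on figures Tesla presented at its AI Day events, not numbers stated in this article. The `HIERARCHY` table and `chips_per_unit` helper are hypothetical names for illustration.

```python
# Roll up Dojo's packaging hierarchy: chip -> tile -> System Tray -> cabinet -> ExaPOD.
# Tray, cabinet and ExaPOD multipliers are from the article; chips per tile,
# cores per chip and per-chip throughput are assumptions (Tesla AI Day figures).

from math import prod

# Ordered from smallest to largest unit: (level name, sub-units per unit).
HIERARCHY = [
    ("tile", 25),          # D1 chips per training tile (assumed)
    ("system_tray", 6),    # tiles per System Tray (article)
    ("cabinet", 2),        # System Trays per cabinet (article)
    ("exapod", 10),        # cabinets per ExaPOD (article)
]
CORES_PER_CHIP = 354       # assumed; the article says "over 300"
TFLOPS_PER_CHIP = 362      # assumed low-precision (BF16/CFP8) peak per D1 chip


def chips_per_unit(level: str) -> int:
    """Number of D1 chips contained in one unit at the given level."""
    names = [name for name, _ in HIERARCHY]
    idx = names.index(level)  # raises ValueError for an unknown level
    return prod(count for _, count in HIERARCHY[: idx + 1])


if __name__ == "__main__":
    chips = chips_per_unit("exapod")
    print(chips)                                     # 3000
    print(chips * CORES_PER_CHIP)                    # 1062000
    print(chips * TFLOPS_PER_CHIP / 1e6, "EFLOPS")   # 1.086 EFLOPS
```

Under these assumptions, one ExaPOD holds 120 tiles and 3,000 D1 chips, or about 1.06 million cores, with a low-precision peak of roughly 1.1 exaFLOPS. Keeping the hierarchy as an ordered list makes it easy to change one multiplier and see the effect at every level above it.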

Dojo aims to significantly accelerate the auto-labeling process used to train the Autopilot model, helping it recognize real-world objects more accurately and understand situations well enough to make the right decisions. But even during its early test phase, Dojo reportedly drew so much power that it overloaded the local power grid.