Researchers team with Intel to make super-sensitive artificial skin

Robotics has made significant strides in recent years, but one major difference between us and our robotic counterparts is our ability to feel. Simply having the dexterity to carry out a task isn't always enough, as our sense of touch gives the brain a lot of contextual information. Today, researchers with the National University of Singapore (NUS) have demonstrated new tools that will allow robots to sense touch, potentially opening the door for them to carry out a wider array of tasks.

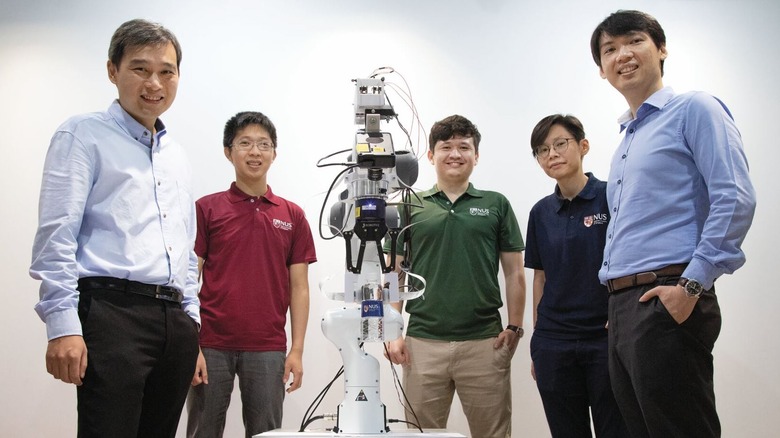

Today's announcement has been a long time in the making. Last year, the NUS Materials Science and Engineering team, led by Assistant Professor Benjamin Tee, detailed the development of an artificial skin sensor meant to give robots and prosthetics a sense of touch. As Tee explains in today's announcement, developing that artificial skin only got the team partway to its goal of actually using the skin to bolster a robot's capabilities.

"Making an ultra-fast artificial skin sensor solves about half the puzzle of making robots smarter," Tee said. "They also need an artificial brain that can ultimately achieve perception and learning as another critical piece in the puzzle."

In this case, the brain is Intel's Loihi neuromorphic computing chip. Loihi uses a spiking neural network to process the touch data gathered by the artificial skin and vision data gathered by an event-based camera. In one test, NUS researchers had a robotic hand "read" braille letters, then passed the tactile information to Loihi through the cloud. Loihi was able to classify those letters with "over 92 percent accuracy," and it did it while "using 20 times less power than a standard Von Neumann processor."
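For readers wondering what "spiking" means here, the following is a minimal Python sketch of a single leaky integrate-and-fire neuron, a common textbook model for this kind of network. It is an illustration only: it is not Loihi's programming interface or the NUS team's model, and the function name, the parameter values, and the made-up `pressure` readings are all assumptions chosen for the example.

```python
def lif_spike_times(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    All names and default values are hypothetical, picked for illustration.
    """
    potential = reset
    spikes = []
    for t, current in enumerate(input_current):
        # The stored potential decays a little each step, then adds new input.
        potential = leak * potential + current
        if potential >= threshold:
            # Crossing the threshold emits a spike and resets the neuron.
            spikes.append(t)
            potential = reset
    return spikes


# Made-up "pressure" readings standing in for a single touch sensor.
pressure = [0.0, 0.0, 0.6, 0.6, 0.6, 0.0, 0.0, 0.6, 0.0, 0.0]
print(lif_spike_times(pressure))  # prints [3, 7]
```

The neuron stays silent when nothing is happening and only emits a spike once enough input has built up, which is the general idea behind event-driven processing and one reason such systems can draw less power than hardware that computes on every clock tick.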

In another test, researchers paired the touch system with an event-based camera to have the robot classify "various opaque containers holding different amounts of liquid." Using the tactile and vision data, Loihi was able to identify rotational slip for each container, which NUS researchers say is important for maintaining a stable grip. In the end, researchers found that pairing event-based vision with tactile data from artificial skin and then processing that information with Loihi "enabled 10 percent greater accuracy in object classification compared to a vision-only system."

In everyday life, robots that can feel as well as see could have a lot of applications, whether that means acting as caregivers to the elderly or the sick or working with new products in a factory. Giving robots the ability to feel could also open the door to automating surgery, which would be a big step forward for robotics.

The National University of Singapore's findings were published in a paper titled "Event-Driven Visual-Tactile Sensing and Learning for Robots" at the 2020 Robotics: Science and Systems conference; you can watch the spotlight talk about this research submitted to the conference in the video embedded above.