Google Assistant Jesus Controversy Gets Official Explanation

Google is facing a minor controversy over Google Assistant and its inability to tell users who the religious figure Jesus is. The "issue" caught public attention through a Facebook Live video in which user David Sams accuses Google Home of "refusing to acknowledge Jesus, Jesus Christ, or God." Google Assistant does, however, provide information on Buddha and Muhammad, which has led some to accuse Google of having an agenda.

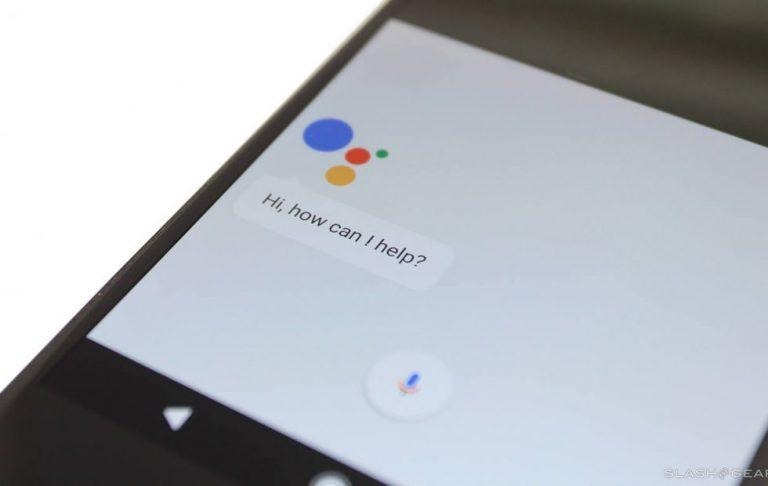

Google Home is Google's smart speaker, similar to Amazon's Echo, which also appears in Sams' video. When Google Home is asked, "Who is Jesus?" Assistant responds with, "Sorry, I don't know how to help with that yet." Google Assistant gives the same response to the more generic question, "Who is God?"

However, when asked who Buddha is, Assistant launches into an explanation pulled from Wikipedia. When asked who Muhammad is, Assistant offers a much shorter, simpler answer: "Muhammad was the founder of Islam." The video has started to attract public attention, though most people who have seen it don't seem to care.

A small minority of Christians have questioned the absence of an explanation for the Jesus question, though, with some fearing that Google is deliberately censoring that response. That is true, sort of, but not for the conspiratorial reasons some people are claiming.

In an official statement via Twitter, Google says the lack of a response is meant to "ensure respect." Because some Google Assistant replies are pulled from the web (such as Assistant's Wikipedia explanation for the Buddha question), the Assistant risks giving an inaccurate answer if someone deliberately tampers with the source content to make the response offensive.

A Google spokesperson said:

"[Google Assistant] might not reply in cases where web content is more vulnerable to vandalism and spam. If our systems detect such circumstances, the Assistant might not reply. If similar vulnerabilities were detected for other questions, including those about other religious leaders, the Assistant also wouldn't respond. We're exploring different solutions and temporarily disabling these responses for religious figures on the Assistant."

Despite their apparent intelligence, the AI systems available to consumers are, in a way, dumb: they depend on humans to teach them and know only what they're taught. Online vandalism exploits this, with people deliberately feeding these systems content that makes them racist, offensive, or otherwise skewed.

We saw this in action back in 2016 with Microsoft's AI chatbot Tay, which was designed to get smarter the more people "talked" to it via tweets. It didn't take long for some awful people to ruin the fun by tweeting a variety of racist, offensive, and otherwise unwelcome things at the chatbot, which then incorporated those things into its own responses.

To be safe, Google Assistant won't respond to a question if the web content upon which it depends is vulnerable to this sort of vandalism. After all, the controversy would be much bigger if Google Home provided an offensive answer to that question based on a vandalized web page.