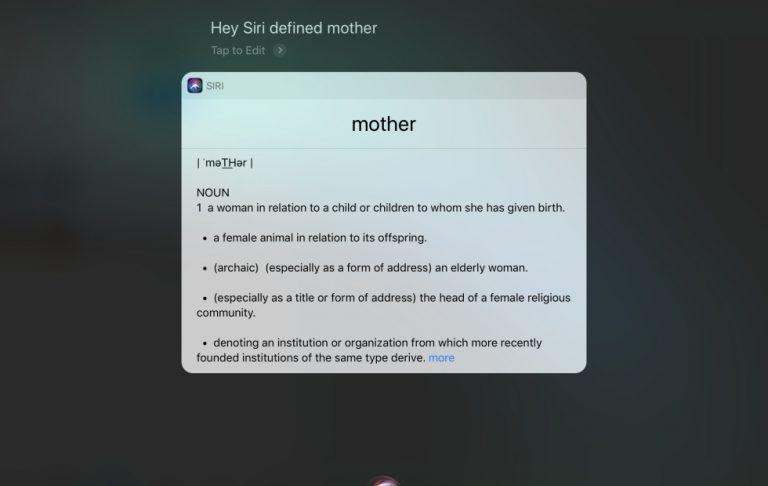

Ask Siri To Define "Mother" Twice And You're In For A Shock

AI-powered voice assistants and the smart speakers they live in are hot items these days. But however useful they may be, they come with some inherent risks. Because these assistants are driven primarily by voice, users have no quick way to screen results and stop them before they blurt out something private, sensitive, or inappropriate. Take Siri, for example, which will gladly give you a definition of the word "mother" that you may not care to hear.

When you ask Siri to define a word that has multiple meanings, it asks after each one whether you want to hear the next meaning in its search results. In this particular case, the second definition of "mother" is hardly accurate and terribly inappropriate. Before offering any other, saner definition, Siri tells you that, as a noun, the word is short for a mother-related cuss word.

Amusing as it may be, the quirk exposes some long-standing flaws in Apple's pioneering personal assistant. For one, Siri has no concept of a filter and will read out explicit language from messages without missing a beat. It also doesn't seem content to offer just the best and most relevant result, preferring to burden the user with choosing from multiple answers.

What is amusing is how many of the comments in the Reddit thread take issue with how poorly Siri pronounces English words in anything but American English. Even the expletive in this particular "bug" is made laughable by Siri's delivery. In some cases, however, poor pronunciation can be more than a distraction: an unclear rendering of a street name, for instance, could prompt drivers to double-check and take their eyes off the road.