AI Needs To Be More Emotional

Smart personal assistants are the new weapon of choice for tech giants, but, idyllic as they may sound, the name is currently a misnomer. Smart? Arguably so. Personal? Not quite. These artificially intelligent assistants might be good at analyzing speech patterns and spoken commands and at connecting to Internet services, but they aren't yet very capable when it comes to accurately predicting what we really want, let alone anticipating what we need. Most importantly, though, they lack the one element that would make them truly relatable, which also happens to be the hardest human trait to reproduce: emotion.

Emotional Intelligence

Artificial intelligence has mostly concerned itself with things like machine learning, language processing, and similar "hard" aspects of knowledge formation, and rightly so. These functions and features of human intelligence are more easily quantifiable than emotions. They also serve as the foundations of future developments in any form of artificial intelligence.

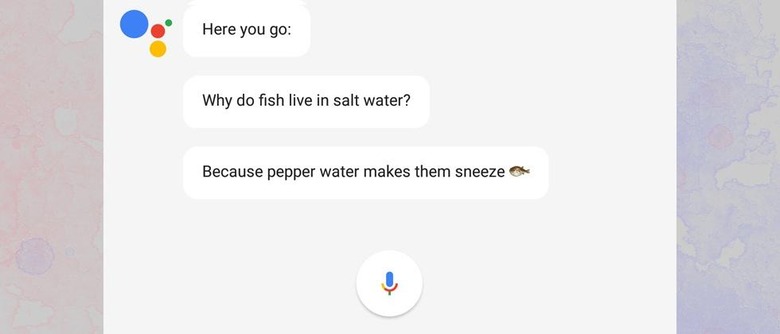

But the AI being presented to people today, the personal assistants and chat bots marketed to consumers, paints a picture of artificial intelligence that just isn't there yet. They conjure up images of disembodied voices or, in some cases, holographic avatars that understand you, sometimes even better than humans can. In truth, all they can really understand are the words you say or type and the structure or syntax of the commands you give. They cannot yet understand the intention behind those words. That would require more than intelligence. It also requires a bit of intuition, which edges close to the realm of emotions.

Context is king

AI assistants and bots are already good at taking context into account in their responses, but the contexts they consider are still far too limited: date and time, location, past queries, and usage habits, mostly things that can be pinned down to numbers and statistics. A truly personal assistant, the flesh-and-blood kind, would also take other contexts into account.

Case in point: AI assistants respond in exactly the same way no matter how you phrase a request or who is asking. You and your partner will get the same response, mostly with the same data, unless the request involves account- or user-specific information. And while personal assistants can already tell your voice apart from others, they aren't yet equipped to give different users different results, or even to respond in a different tone.

This is just one of the nuances that Facebook's Mark Zuckerberg is trying to tackle in his own "Jarvis" AI assistant, which he has built almost from scratch and might soon reveal to the public in a bigger way. He is developing his bot to be aware of contexts beyond the usual set that platform makers give their bots to work with. In doing so, he is making "Jarvis" sound more human.

Empathizing with our needs

Google Now, Cortana, and, sometimes, Siri are advertised as being able to anticipate our needs, but, in truth, all they do is look ahead at our schedules, upcoming flights, or our shopping trends. They aren't exactly aware of what we really need, especially when we ourselves don't know what those needs really are.

We're still years away from an AI that can read your body language and vital signs to divine your mood and, as you plop down on the couch, play the perfect playlist for you. Such an AI would also need to know whether you want to be soothed or spurred to action. And when you tell it to "play something lighter," it would know just what you mean.

An AI like this would require more than the ability to curate your listening habits and preferences. It would also need to know your preferences beyond music and your behavioral patterns, and it would need to tie the two together. It would have to be attuned to the user's emotional and psychological profile and state. In short, the artificial intelligence would have to be emotionally intelligent as well.

Putting a face to the voice

But a disembodied voice, no matter how human it sounds, will still feel creepy. It took years for people to get used to the idea of the telephone, and even then it works only because you know there's an actual, live human being on the other end of the line. A voice-only AI would work only in some contexts, such as when you're out and about with nothing but your phone. In most other cases, we humans prefer to see a face, and perhaps also a body, along with the voice. That's where virtual and especially augmented reality come in.

VR and AR experiences can do more than enchant us with near-realistic visuals. They have the potential to tug at our hearts precisely because of the immersion they offer. They can give these AI assistants a visible representation, usually humanoid, that makes them more approachable and more acceptable. Uncanny valley aside, such virtual representations can lend these AI bots an air of credibility that goes beyond amusing but inessential conversation. They make the experience a more emotional one, which can be both good and bad.

Grave emotional distress

Emotion is a powerful tool and, like any other tool, it can be used for good or for ill. Emotions can be used to build attachment, loyalty, trust, and confidence. But they can also be used to manipulate, deceive, coerce, or even extort.

Imagine how that could be amplified in a VR setting, where everything looks and feels real. You don't even have to go down the route of science fiction. Just look at the sometimes amusing but always painful videos of people tripping and falling because of what they perceive to be real in VR. Emotion is a potent element in bringing the experience to life.

But we don't even have to step inside those goggles to see how emotional AI can affect us. Even the slightest semblance of emotion in non-playable characters (NPCs) in games can have a striking effect. Imagine, then, the impact of game AI that truly exhibits the same emotions and intelligence as its chat bot or assistant counterparts.

Wrap-up

The AI assistants we have today are still a far cry from the helpful, almost psychic versions we see in science fiction or even in tech demos. Despite being marketed as virtual personal assistants, they are still cold, distant, and impersonal. A truly effective virtual personal assistant would need to be more than just intelligent. It would also need to be emotional.

That, however, is easier said than done and remains a distant dream in the realm of artificial intelligence. Fortunately, computer scientists and the companies that fund them seem to have more or less secured a footing in the basics. Perhaps now is the right time to move on to phase two.