What Is Differential Privacy And Why Is Apple So Excited About It?

The unexpected star of iOS 10 may well end up being a barely-known statistical technique for balancing privacy and personalization, as Apple further positions itself as the bastion of user data protection. Differential privacy may not be as slick as Siri's expanded skill set, or as culturally timely as new emojis and stickers, but it's arguably far more important than either.

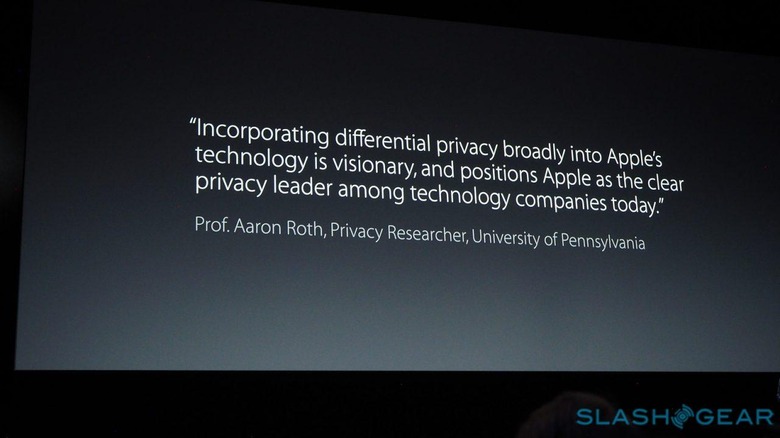

Apple pulled no punches in setting up privacy – and just who has rights to your data as a user of often free services – as a key differentiator between it and its rivals. Google and Microsoft weren't mentioned by name, but Apple's public commitment during the WWDC keynote to avoid things like user profiles and tracking was a clear attack on competitors who prefer to process data in the cloud and maybe do a little data mining along the way.

In the process, though, that stance pushes Apple into a corner. After all, data analytics is an essential part of any platform, not least when you're trying to deliver more accurate and welcome suggestions in Apple Maps, work out which tracks are popular enough to surface in Apple Music, or spot up-and-coming words and phrases early enough to add them to iOS's autocorrect.

Apple's Craig Federighi name-checked the answer to that dilemma during the WWDC keynote yesterday, but it was later in the day, at the company's State of the Union presentation, where developers were really introduced to differential privacy.

Apple didn't create differential privacy; it's an established technique for masking data so that individual records can't be extracted from it.

It works by adding noise to each record, enough that no single answer reliably reveals what the original value was. That makes each individual result close to worthless in isolation, but across many records the noise largely averages out, so you can get statistical insight close to what traditional methods provide while sharply limiting what can be inferred about any one person.
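The classic way to add noise to each record is randomized response, sketched below in Python as an illustration rather than Apple's published implementation. Each user reports a yes/no answer truthfully only with some probability; the parameter `epsilon` (standard differential-privacy notation for privacy loss, not something the article names) sets that probability, and smaller values mean more noise and stronger privacy. The 30 percent "yes" rate and the population size are hypothetical.

```python
import math
import random

def randomized_response(truth: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true answer with probability e^eps / (e^eps + 1), else the opposite."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1)
    return truth if rng.random() < p_truth else not truth

def estimate_true_fraction(reports: list[bool], epsilon: float) -> float:
    """Undo the known bias of the noise to estimate the real share of 'yes' answers."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1)
    observed = sum(reports) / len(reports)
    # E[observed] = (1 - p) + f * (2p - 1), so solve for the true fraction f.
    return (observed - (1 - p)) / (2 * p - 1)

rng = random.Random(42)
epsilon = math.log(3)  # each report is truthful 75% of the time
truths = [i < 30_000 for i in range(100_000)]  # hypothetical: 30% of users answer "yes"
reports = [randomized_response(t, epsilon, rng) for t in truths]
print(round(estimate_true_fraction(reports, epsilon), 2))  # close to 0.3
```

Any single report is deniable, since it is wrong a quarter of the time, yet with 100,000 reports the bias-corrected estimate lands near the true 30 percent.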

There's a good example – without too much math in the process – by privacy researcher Anthony Tockar about how you could figure out individual income from an ostensibly anonymous database of residents in an area.
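A toy version of this kind of attack is a differencing attack: ask for an aggregate over everyone, then over everyone except one person, and subtract. The Python sketch below uses made-up names and incomes and is not Tockar's own example. It also shows a standard defense, the Laplace mechanism, which adds random noise to each query's answer scaled to how much one person could change it (`cap`, an assumed upper bound on income) divided by `epsilon`.

```python
import random

# Hypothetical toy data: incomes of residents in a small area.
INCOMES = {"alice": 72_000, "bob": 55_000, "carol": 61_000, "dave": 48_000}

def total_income(names):
    """An 'anonymous' aggregate query: only a sum is ever returned."""
    return sum(INCOMES[n] for n in names)

def noisy_total_income(names, epsilon, cap, rng):
    """Laplace mechanism: clamp each income to [0, cap], then add Laplace(cap / epsilon) noise."""
    clamped = sum(min(max(INCOMES[n], 0), cap) for n in names)
    scale = cap / epsilon
    # The difference of two i.i.d. exponential draws is Laplace-distributed with this scale.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return clamped + noise

everyone = set(INCOMES)
everyone_but_alice = everyone - {"alice"}

# Exact aggregates: two innocuous-looking sums reveal one person's income.
leaked = total_income(everyone) - total_income(everyone_but_alice)
print(leaked)  # 72000

# Noisy aggregates: the same subtraction is now dominated by noise.
rng = random.Random(0)
noisy_leak = (noisy_total_income(everyone, 0.5, 200_000, rng)
              - noisy_total_income(everyone_but_alice, 0.5, 200_000, rng))
print(round(noisy_leak))
```

Each noisy query spends `epsilon`, so the two together spend `2 * epsilon`; that accumulation across queries is why limiting how much is asked of each person matters.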

How does that help in iOS 10? Let's say Apple wants to refine what suggestions it makes for potential restaurants in Apple Maps when someone is looking for a lunch spot. The traditional way might be to log each user and what they tap on, then combine that data across many users.

Apple has said no to such "user profiles", however, and so differential privacy handles it in a more complicated – but less traceable – way.

Each deep link is assigned a unique hash. When a user encounters that link, their device adds noise to the hash, extracts a fragment of the result, and sends only that fragment to Apple. Individually, each fragment is useless: Apple couldn't use it to identify the original user or their selection.

However, in aggregate all those fragments can be combined to figure out the actual preferences, without exposing the individual preferences of any one particular user.
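The hash, noise, and fragment pipeline can be illustrated with a simplified Python sketch; it is an assumption for illustration, not Apple's actual algorithm. Each item is hashed into one of `M` buckets, the device picks one bucket position at random (the "fragment"), applies randomized response to the single bit saying whether its item landed there, and sends just that position and noisy bit. The server never sees which item any user chose and corrects for the noise bucket by bucket. The bucket count, the SHA-256 hash, the `maps://` links, the choice shares, and `epsilon` are all hypothetical.

```python
import hashlib
import math
import random
from collections import Counter

M = 256  # hypothetical number of hash buckets

def bucket(item: str) -> int:
    """Hash an item (e.g. a deep link) to one of M buckets."""
    digest = hashlib.sha256(item.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % M

def client_fragment(item: str, epsilon: float, rng: random.Random) -> tuple[int, int]:
    """Send one noisy fragment: a random bucket index plus a randomized bit for it."""
    j = rng.randrange(M)  # chosen independently of the item, so it reveals nothing alone
    true_bit = 1 if bucket(item) == j else 0
    p = math.exp(epsilon) / (math.exp(epsilon) + 1)
    noisy_bit = true_bit if rng.random() < p else 1 - true_bit
    return j, noisy_bit

def server_estimate(fragments: list[tuple[int, int]], epsilon: float) -> dict[int, float]:
    """Estimate the share of users whose item hashes to each bucket."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1)
    seen = Counter(j for j, _ in fragments)
    ones = Counter(j for j, bit in fragments if bit == 1)
    estimates = {}
    for j in range(M):
        if seen[j]:
            observed = ones[j] / seen[j]
            estimates[j] = (observed - (1 - p)) / (2 * p - 1)  # undo the known noise bias
        else:
            estimates[j] = 0.0
    return estimates

rng = random.Random(7)
epsilon = math.log(3)
# Hypothetical lunch-spot choices: 60% cafe A, 30% cafe B, 10% cafe C.
choices = ["maps://cafe-a"] * 120_000 + ["maps://cafe-b"] * 60_000 + ["maps://cafe-c"] * 20_000
fragments = [client_fragment(c, epsilon, rng) for c in choices]
estimates = server_estimate(fragments, epsilon)
for link in ("maps://cafe-a", "maps://cafe-b", "maps://cafe-c"):
    print(link, round(estimates[bucket(link)], 2))
```

Each bucket receives only about 1/M of all fragments, so these estimates are noisier than in a simple yes/no survey; production systems generally use more elaborate sketches to spread each user's signal more efficiently.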

It's clever stuff, though in theory, if used to excess, it could give Apple enough fragments from each person to identify them.

To stop that from happening, Apple assigns what it calls a "privacy budget", effectively a limit on the number of fragment submissions that can be made from a single person during a set period. Those that do get submitted go into an anonymous pipeline, and Apple periodically deletes fragment donations from the server.
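A per-device privacy budget can be sketched as a small rate limiter, assuming (as is standard in differential privacy, though the article doesn't spell it out) that each submission spends some `epsilon` and that these amounts add up across submissions. The `PrivacyBudget` class, the rolling 24-hour window, and the limit below are hypothetical, not Apple's actual parameters.

```python
import time
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class PrivacyBudget:
    """Hypothetical per-device budget: at most max_epsilon spent per rolling window."""
    max_epsilon: float
    window_seconds: float
    _spent: list = field(default_factory=list, init=False, repr=False)  # (timestamp, epsilon)

    def try_submit(self, epsilon: float, now: Optional[float] = None) -> bool:
        """Record a submission and return True if it fits the budget; otherwise refuse it."""
        now = time.time() if now is None else now
        # Forget submissions older than the window, freeing their share of the budget.
        self._spent = [(t, e) for t, e in self._spent if now - t < self.window_seconds]
        if sum(e for _, e in self._spent) + epsilon > self.max_epsilon:
            return False
        self._spent.append((now, epsilon))
        return True

budget = PrivacyBudget(max_epsilon=2.0, window_seconds=24 * 60 * 60)
print(budget.try_submit(1.0, now=0))       # True
print(budget.try_submit(1.0, now=10))      # True
print(budget.try_submit(1.0, now=20))      # False: this window's budget is used up
print(budget.try_submit(1.0, now=86_401))  # True: the first submission has aged out
```

Refused submissions are simply dropped, so a heavy user contributes no more information within a window than a light one.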

Differential privacy has been around in cryptography circles for about a decade, but Apple's public adoption of the approach is likely to bring it the most attention it has received to date.

Given how easily even a small amount of metadata can be analyzed to single out individuals (one group of MIT researchers found that just four approximate place-and-time records were enough to uniquely identify 95 percent of people in a mobility database of 1.5 million users), it seems likely that the next big question in privacy won't just be whether we're protected, but how.