Back to Basics: How Google's driverless car stays on the road

Google's self-driving cars are making headlines again, now that they've expanded testing from California into Nevada. Competitors are hot on their heels, but for now Google appears to hold the top spot in autonomous vehicle design. So how do they do it? With a combination of some incredible software and hardware engineering, using techniques developed both by Google and by the best and brightest of DARPA's robotic race challenges.

The first step in the process is navigation, which requires a little more than the Google Maps Navigation functionality built into Android. (That automated system isn't perfect: it tells me to go the wrong way down a one-way street to get to my house, for example.) Before a Google autonomous car goes anywhere, a separate human driver manually maps out the route, noting any changes in road conditions, obstacles or markers. Once the route is set, a safety driver and a software engineer climb into one of the automated cars.

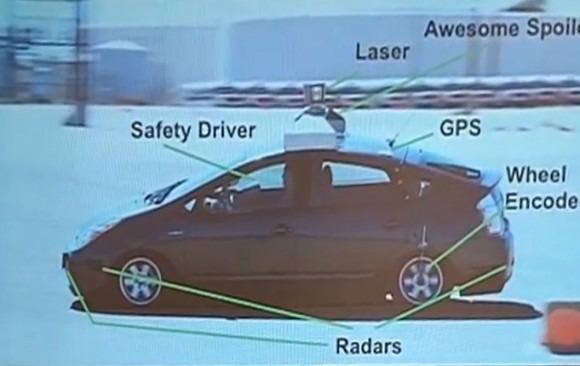

Once the passengers are settled, the car loads information prepared by one of Google's massive data centers into its onboard computer. The remote computers map the route, while the local computer continually processes data from the car's sensors, including a standard GPS receiver, a powerful laser array for "seeing" obstacles, and small radar arrays mounted around the sides of the vehicle.

The laser mounted on top of the spoiler is probably the most crucial element for close-range operation: it builds a three-dimensional image of everything within about 50 feet of the car. The car's software compares this view of the immediate surroundings with the measurements taken during the earlier manual run, paying special attention to moving objects and taking extra input from a wheel encoder. This allows much more precise movement than GPS alone, keeping the car's position accurate to within a few centimeters of the previously gathered data.
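Google hasn't published its localization method, but the basic idea of matching a live laser scan against a previously recorded map can be sketched. The example below is a deliberately simple, hypothetical version: it assumes the car's heading is already known (say, from the wheel encoder and other sensors), treats the prior map and the scan as 2D point lists, and brute-force searches small position offsets around a coarse GPS guess for the one that best lines the scan up with the map. The function names, search radius, and step size are all made up for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def mean_nearest_distance(scan: List[Point], prior_map: List[Point]) -> float:
    """Average distance from each scan point to the closest point in the prior map."""
    total = 0.0
    for sx, sy in scan:
        total += min(math.hypot(sx - mx, sy - my) for mx, my in prior_map)
    return total / len(scan)


def refine_position(scan: List[Point], prior_map: List[Point], gps_guess: Point,
                    search_radius: float = 2.0, step: float = 0.05) -> Point:
    """Brute-force search for the car position that best aligns the scan with the map.

    `scan` holds obstacle positions relative to the car (heading assumed known), so
    adding a candidate car position converts the scan into map coordinates.
    """
    best, best_err = gps_guess, float("inf")
    n = int(round(search_radius / step))
    for i in range(-n, n + 1):
        for j in range(-n, n + 1):
            cand = (gps_guess[0] + i * step, gps_guess[1] + j * step)
            shifted = [(cand[0] + px, cand[1] + py) for px, py in scan]
            err = mean_nearest_distance(shifted, prior_map)
            if err < best_err:  # keep the offset with the tightest fit
                best, best_err = cand, err
    return best


if __name__ == "__main__":
    # Hypothetical landmarks recorded during the manual mapping run.
    prior_map = [(10.0, 0.0), (0.0, 10.0), (-5.0, 3.0), (7.0, -8.0), (3.0, 4.0)]
    true_pos = (1.2, -0.7)
    # What the laser would see from the true position (relative coordinates).
    scan = [(mx - true_pos[0], my - true_pos[1]) for mx, my in prior_map]
    print(refine_position(scan, prior_map, gps_guess=(0.0, 0.0)))
```

Even though the "GPS" guess here is off by well over a meter, the search recovers a position within floating-point error of `(1.2, -0.7)`, because only the correct offset makes every scan point land back on its landmark. Production systems use far more efficient matching (and fold in rotation and odometry), but the principle of fitting live sensor data to a prior map is the same.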

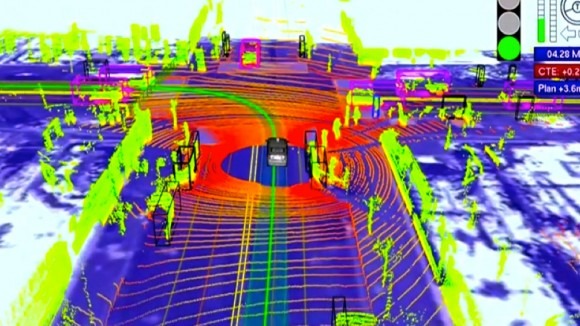

The laser can distinguish between other cars, pedestrians, cyclists, and small and large stationary objects, and it doesn't need light to function. The radar arrays keep an eye on fast-moving objects farther out than the laser can detect. The front-mounted camera handles traffic controls, reading road signs and traffic lights for the same information a human driver uses. Google's computers combine data from the laser and the camera to build a rudimentary 3D model of the immediate area, noting, for example, the color of an active traffic light.
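One common way to combine laser and camera data is to project a 3D point found by the laser into the camera image and read the color at that pixel. The sketch below assumes the point has already been transformed into the camera's coordinate frame (z pointing forward) and uses a plain pinhole camera model; the focal lengths, image, and color thresholds are hypothetical, and nothing here is claimed to be how Google's software actually classifies traffic lights.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]


def project_to_pixel(point_cam: Tuple[float, float, float], fx: float, fy: float,
                     cx: float, cy: float) -> Optional[Tuple[int, int]]:
    """Pinhole projection of a 3D point in the camera frame to (u, v) pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        return None  # the point is behind the camera, so it cannot appear in the image
    u = fx * x / z + cx
    v = fy * y / z + cy
    return int(round(u)), int(round(v))


def light_color(image: List[List[RGB]], pixel: Tuple[int, int]) -> str:
    """Crude color guess for the pixel where a laser-detected light lands (thresholds made up)."""
    u, v = pixel
    if not (0 <= v < len(image) and 0 <= u < len(image[0])):
        return "unknown"  # projected outside the camera's field of view
    r, g, b = image[v][u]
    if r > 150 and g > 150 and b < 100:
        return "yellow"
    if r > 150 and g < 100:
        return "red"
    if g > 150 and r < 100:
        return "green"
    return "unknown"


if __name__ == "__main__":
    img = [[(0, 0, 0)] * 4 for _ in range(4)]  # tiny all-black test image
    img[1][2] = (255, 0, 0)                    # a lit red signal at column 2, row 1
    px = project_to_pixel((5.0, 0.0, 10.0), fx=2.0, fy=2.0, cx=1.0, cy=1.0)
    print(px, light_color(img, px))
```

The laser supplies where the light is in 3D space, and the camera supplies what color it is; neither sensor alone gives both.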

There's a staggering amount of contextual software at work at all times. For lane changes, an algorithm determines the smoothest path through the surrounding road by combining trajectory, speed and the safest distance from obstacles. At an intersection without a traffic light, Google's cars yield the right of way according to traffic laws. But if other drivers don't take their turns, the Google car moves forward slightly, then watches for a reaction. If it determines that the other driver still won't move, it takes the initiative.
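The two behaviors described above can be sketched in a few lines, with the caveat that Google hasn't published its actual logic. The first part scores hypothetical lane-change candidates with a weighted cost (smoothness, speed change, and clearance from obstacles) and rejects any that pass too close to something. The second part is a small state machine for the intersection behavior: wait while it isn't our turn, creep forward if the other driver stalls, then go if they still don't react. Every name, field, weight, and timeout here (`LaneChangeCandidate`, `pick_lane_change`, `StopSignPolicy`, `patience_s`, `watch_s`) is invented for illustration.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import List, Optional


@dataclass
class LaneChangeCandidate:
    name: str
    max_lateral_accel: float  # m/s^2; lower means a smoother trajectory
    speed_change: float       # m/s; change from the current speed
    min_clearance: float      # m; closest predicted approach to any obstacle


def pick_lane_change(candidates: List[LaneChangeCandidate], w_smooth: float = 1.0,
                     w_speed: float = 0.5, w_clear: float = 10.0,
                     min_safe_clearance: float = 1.5) -> Optional[LaneChangeCandidate]:
    """Return the lowest-cost candidate, or None if every option is too close to an obstacle."""
    safe = [c for c in candidates if c.min_clearance >= min_safe_clearance]
    if not safe:
        return None  # no acceptable lane change; stay in the current lane

    def cost(c: LaneChangeCandidate) -> float:
        # Penalize harsh steering, large speed changes, and small clearances.
        return (w_smooth * c.max_lateral_accel
                + w_speed * abs(c.speed_change)
                + w_clear / c.min_clearance)

    return min(safe, key=cost)


class Action(Enum):
    WAIT = auto()
    CREEP = auto()
    GO = auto()


class StopSignPolicy:
    """Yield by the rules, creep if the other driver stalls, then go if they still don't move."""

    def __init__(self, patience_s: float = 3.0, watch_s: float = 1.5):
        self.patience_s = patience_s  # how long to wait before creeping forward
        self.watch_s = watch_s        # how long to watch for a reaction after creeping
        self.crept_at: Optional[float] = None

    def decide(self, waited_s: float, my_turn: bool, other_moving: bool) -> Action:
        if my_turn:
            return Action.GO          # right of way under traffic law
        if other_moving:
            return Action.WAIT        # the other driver is taking their turn
        if self.crept_at is None:
            if waited_s >= self.patience_s:
                self.crept_at = waited_s
                return Action.CREEP   # edge forward to signal intent
            return Action.WAIT
        if waited_s - self.crept_at >= self.watch_s:
            return Action.GO          # still no reaction: take the initiative
        return Action.WAIT            # hold position and watch for a reaction
```

For example, feeding `StopSignPolicy().decide` the waiting times 1.0, 3.0, 4.0 and 4.5 seconds with a stalled driver (`my_turn=False`, `other_moving=False`) yields WAIT, CREEP, WAIT, GO. Keeping the two pieces separate mirrors the article's description: one is a continuous optimization, the other a rule-following decision with a fallback.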

Safety is paramount for Google: none of their cars go anywhere without a separate manual run-through and two human operators (interestingly, something Nevada's new law requires anyway). But their goal is a system that can remove the human element from the unavoidably dangerous act of driving. They've been testing for years and have logged more than 250,000 miles of data, but there's still no word on how or when they intend to bring this technology to market.