We can't trust self-driving car AI just yet

Self-driving cars are the future. Almost every car maker, in one way or another, has embraced technology that can take over the wheel partially or completely. It has become not a question of "if" but of "when". Some car makers are convinced that self-driving cars will be hitting the road in two years' time. That, however, may be an extremely optimistic outlook, one that overlooks how self-driving car tech just isn't trustworthy yet, and not for the reasons you might immediately think.

Life and death

There have been accidents involving self-driving cars or cars with assistive technologies. Some of them have, unfortunately, even resulted in deaths. It's almost too easy to blame either the technology or the human behind the wheel and, in some cases, investigations do point to one or the other as the cause of the incident. Whichever side was culpable, the reactions to and coverage of the accidents prove that neither the technology nor humans are ready for that serene picture car makers and self-driving proponents are painting.

Learning machines and Murphy’s Law

Any self-driving car would naturally require some AI to direct it. And no AI is born complete like Athena springing from Zeus' forehead. They have to learn, and the way they learn is fundamentally different from how humans learn (thankfully). At their most basic, these AIs learn through simulations and by devouring thousands if not millions of data points that would take humans multiple lifetimes to digest.

But in spite of how fast and how efficiently these machines can learn, they mostly only learn through simulated "experiences". At most, they're tested out in secluded and controlled environments. It has only been recently that they have been legally allowed on real-world roads, where these accidents have occurred. They may have accumulated and learned from thousands of data points, but comparatively fewer of those points come from real-world tests. In other words, their real-world data set is still small.

But what is there to learn in the real world that AI couldn't have learned artificially? Those would include the seeming randomness of nature, the frivolity of human nature, and Murphy's Law. Weather conditions could change at a moment's notice, throwing off sensors when you need them the most. Human drivers could suddenly cut you off or swerve and human pedestrians could suddenly decide to imitate the ill-fated chicken. While computers are definitely faster at computing a course of action or compensating for a change in conditions, they will still need data to know how to best react to a situation. And in that, they are still infants learning how to drive safely.

Hardware is hard

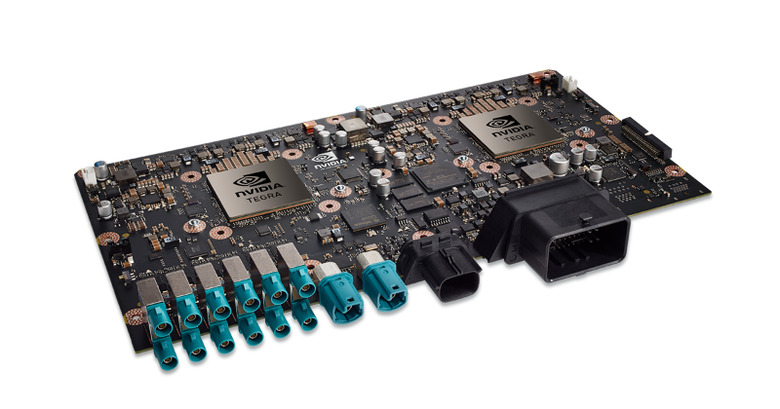

You might think, and rightly, that AI is predominantly made of software, algorithms, and digital data. But all of that needs hardware to run on as well. And the kind of AI that's needed to make split-second calculations and decisions requires hardware that is more powerful than our smartphones. And few of the self-driving cars have that kind of hardware yet.

According to Ars Technica, Elon Musk just recently revealed that Tesla was ditching NVIDIA's AI-centric Drive PX 2 chips for their own custom ones, designed with the help of former Apple A5 processor engineer Pete Bannon. We'll leave it to the companies' marketing teams to argue whether there's truth to claims of better performance, but there is one point that this change of allegiance does make: the computational hardware in self-driving cars isn't specifically made for self-driving cars.

True, NVIDIA's AI platform is designed specifically for, well, AI, but NVIDIA has to design it in a general way to embrace all types of AI research and applications. And not all of these applications are created equal. For a self-driving car AI, speed is even more critical than image processing, deep learning, and the like. Almost all car manufacturers use computer hardware they buy or license from the likes of NVIDIA. Most focus on the software and user experience, with less thought given to the silicon that can mean the difference between life and death.

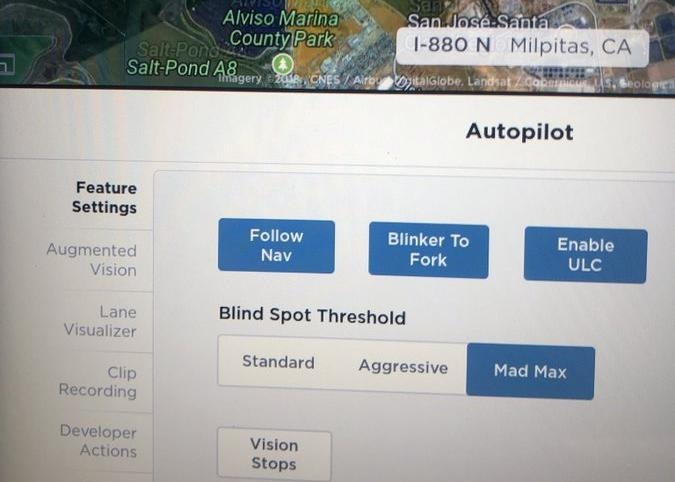

Assisted or Automated

But it isn't just technology that isn't ready yet. Right now, we humans are already confused about the inconsistency between various "smart" car technologies being pushed by car brands. On the one hand, some are already preaching "hands off" automation. On the other hand, others like Tesla are insisting their features are merely assistive and still require complete driver attention. The confusion between the two has already cost at least one life.

It might be easy to say that the driver is ultimately responsible for knowing the difference, but car makers and sellers aren't helping to make it easier. Drivers and passengers are less likely to trust either when they're not sure which is which. And this distrust will pretty much carry on when cars eventually shed even assistive driving, which will probably not happen for a long time. It's a state of uncertainty that makes it almost impossible to trust a car that can drive itself, whether in short bursts or for hour-long drives.

All or nothing

There is one other thing that car makers don't exactly admit upfront. Their vision for self-driving cars is mostly set in a future where self-driving cars are the norm rather than the rare exception. In that future, self-driving cars will have it easy because all other cars on the road are just like them: efficient, precise, and controlled. In other words, they work best when among their own kind.

By 2020, however, we will still have a mix of self-driving cars and human-driven cars. Almost all self-driving cars will behave as laws and traffic regulations require. Some humans will do the same. Others, however, will fall back on the human tendency to try to get away with something if they can. Elon Musk once mentioned that self-driving cars will always yield and are easily bullied. Imagine what happens when some drivers, unpredictable as they are, decide to use that to their gain.

Security matters

And then there's the elephant in the room that car makers don't seem interested in addressing. At least not until it's too late. Self-driving cars are, by nature, dependent on computers that have control over the entire car and on networks that let the car communicate either with some remote site or even with the passengers' phones. As such, they are susceptible to getting hacked.

It has already been proven through studies and research, though fortunately only on a small scale and under contrived conditions. But why wait until self-driving cars become more widespread? At the moment, security doesn't seem to be a high priority and is simply a checkbox on a list. Given that attitude and humans' own poor security practices, it shouldn't come as a surprise when car hacking becomes as common as self-driving cars in the future.

Wrap-up: The fifth element

They say that trust is hard to give but easily broken, and that's when dealing with other humans. How much more difficult would it be to trust a fancy new technology that asks you to put your life in the hands of an invisible and impersonal entity? It will be difficult to put our trust in self-driving car AI even in the next year or two, not only because the technology is not there yet but also because we are largely still unprepared to accept it. Car makers and AI proponents will have a lot of work to do, from communication to certification to regulation, before humans can really sit back, relax, and just enjoy the ride.