These Facebook robots are training more cunning AI

Facebook has revealed the fruits of its robotics division, which is driving artificial intelligence research and producing some creepy bug-like 'bots in the process. Building robots that can walk, use tactile sensors to manipulate unfamiliar objects, and even show curiosity may seem a strange endeavor for a social network, but Facebook argues that they're just what its AIs need.

"Doing this work means addressing the complexity inherent in using sophisticated physical mechanisms and conducting experiments in the real world," the team at Facebook explains, "where the data is noisier, conditions are more variable and uncertain, and experiments have additional time constraints (because they cannot be accelerated as when learning in simulation). These are not simple issues to address, but they offer useful test cases for AI."

At the root of most of these efforts is self-supervision. Rather than training an AI on human-labeled examples for one specific task, self-supervised learning lets the robots learn directly from the raw data they collect. That paves the way for far more capable AIs that can generalize from their experience and better tackle new tasks.
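To make the idea concrete, here is a minimal Python sketch of a self-supervised setup. It assumes a robot that logs one joint-sensor reading per time step. The training target for each example is simply the next reading in the stream, so no human labeling is needed. The function names and the toy linear predictor are hypothetical illustrations, not Facebook's code or training objective.

```python
from typing import List, Sequence, Tuple


def make_pairs(stream: Sequence[float], window: int = 1) -> List[Tuple[List[float], float]]:
    # Each example pairs `window` consecutive readings with the reading that follows,
    # so the raw stream supplies its own labels.
    return [(list(stream[i:i + window]), stream[i + window])
            for i in range(len(stream) - window)]


def fit_next_step(stream: Sequence[float]) -> float:
    # Least-squares fit of s[t+1] ~ w * s[t], learned from the raw stream alone.
    pairs = make_pairs(stream, window=1)
    num = sum(ctx[0] * target for ctx, target in pairs)
    den = sum(ctx[0] ** 2 for ctx, _ in pairs)
    return num / den
```

For example, `fit_next_step([1.0, 2.0, 4.0, 8.0, 16.0])` recovers a growth factor of 2.0 purely from the unlabeled sequence. A real robot would predict many sensor channels with a far richer model, but the principle of deriving targets from the data itself is the same.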

Creepiest of the experiments Facebook is detailing today is probably its hexapod robot. The six-legged 'bot begins with no understanding of either its physical capabilities or the world around it. A reinforcement learning (RL) algorithm then kicks in, as the robot effectively teaches itself the best way to walk.

That involves learning to read its joint sensors, working out its own sense of balance and orientation, and then combining that self-awareness with a growing experience of the real world around it. "Our goal is to reduce the number of interactions the robot needs to learn to walk, so it takes only hours instead of days or weeks," Facebook says. "The techniques we are researching, which include Bayesian optimization as well as model-based RL, are designed to be generalized to work with a variety of different robots and environments."
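Facebook doesn't spell out its setup, but the core loop of Bayesian optimization can be sketched in plain Python. This sketch assumes the gait is governed by a single parameter in [0, 1] and that each call to the hypothetical `walking_trial` function stands in for one costly real-world trial. A Gaussian-process surrogate predicts both the expected result and its uncertainty, and an upper-confidence-bound (UCB) rule picks the next trial. Spending trials only where they are most informative is how the method keeps the number of real interactions low.

```python
import math
import random
from typing import Callable, List, Tuple


def rbf(a: float, b: float, length_scale: float = 0.2) -> float:
    # Squared-exponential kernel; k(x, x) = 1, so the prior variance is 1.
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def solve(matrix: List[List[float]], rhs: List[float]) -> List[float]:
    # Gaussian elimination with partial pivoting; fine for a handful of points.
    n = len(rhs)
    a = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def gp_predict(xs: List[float], ys: List[float], q: float,
               noise: float = 1e-3) -> Tuple[float, float]:
    # Posterior mean and standard deviation of a zero-mean GP at query point q.
    k_mat = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
             for i, a in enumerate(xs)]
    k_q = [rbf(a, q) for a in xs]
    alpha = solve(k_mat, ys)
    v = solve(k_mat, k_q)
    mean = sum(k * al for k, al in zip(k_q, alpha))
    var = 1.0 - sum(k * vi for k, vi in zip(k_q, v))
    return mean, math.sqrt(max(var, 0.0))


def ucb(xs: List[float], ys: List[float], q: float, kappa: float) -> float:
    # Upper confidence bound: high if q looks good OR is still uncertain.
    mean, std = gp_predict(xs, ys, q)
    return mean + kappa * std


def walking_trial(gait_param: float) -> float:
    # HYPOTHETICAL stand-in for one real-world trial (e.g. measured forward speed).
    return math.exp(-((gait_param - 0.3) ** 2) / 0.02)


def bayes_opt(objective: Callable[[float], float], n_init: int = 2,
              n_iter: int = 8, kappa: float = 2.0,
              seed: int = 0) -> Tuple[float, float, int]:
    rng = random.Random(seed)
    grid = [i / 100 for i in range(101)]  # candidate gait parameters in [0, 1]
    xs = [rng.random() for _ in range(n_init)]  # a few random initial trials
    ys = [objective(x) for x in xs]
    for _ in range(n_iter):
        # Run the next "real" trial where the surrogate's UCB is highest.
        x_next = max(grid, key=lambda q: ucb(xs, ys, q, kappa))
        xs.append(x_next)
        ys.append(objective(x_next))
    best = max(range(len(ys)), key=lambda i: ys[i])
    return xs[best], ys[best], len(ys)
```

Calling `bayes_opt(walking_trial)` runs ten trials in total: two random ones, then eight chosen by the surrogate. The `kappa` parameter trades exploration against exploitation. A real robot would tune many gait parameters at once and would more likely rely on an established Gaussian-process library than on this hand-rolled solver.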

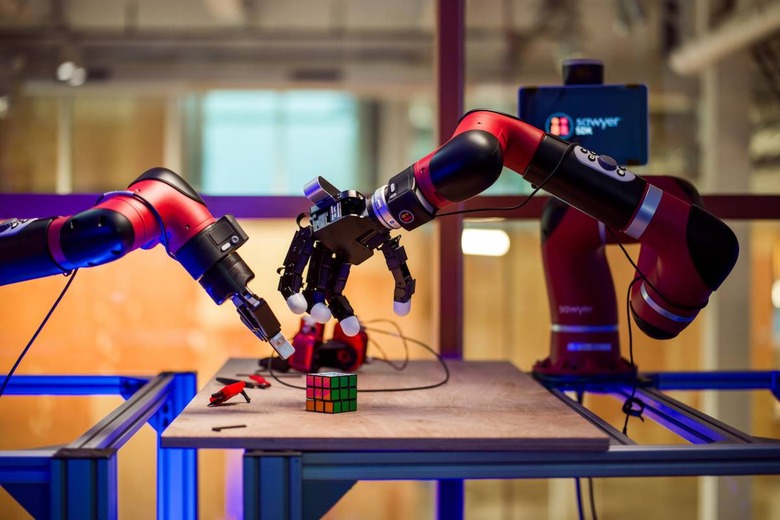

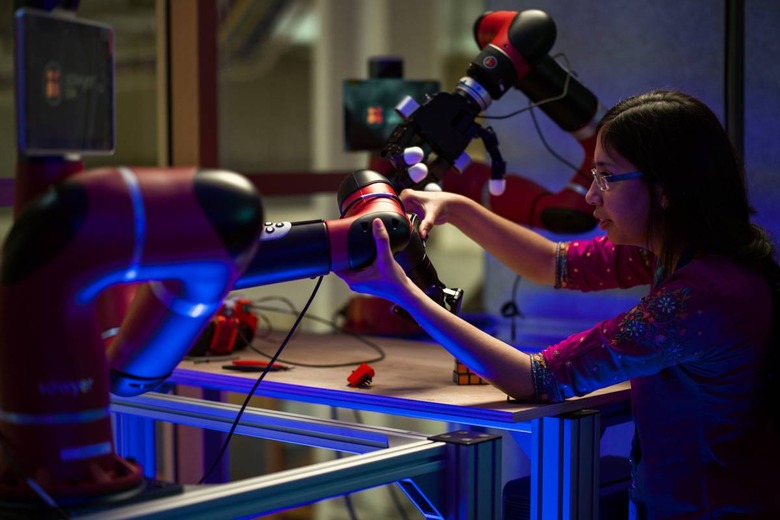

In another experiment, Facebook is looking at how it can instill curiosity in robots. It used robotic arms and a model specifically designed to reward not only completing a task but also reducing the model's own uncertainty along the way. That, it's suggested, could stop a robot from inadvertently trapping itself while focusing single-mindedly on completing a task.

"The system is aware of its model uncertainty and optimizes action sequences to both maximize rewards (achieving the desired task) and reduce that model uncertainty, making it better able to handle new tasks and conditions," Facebook explains. "It generates a greater variety of new data and learns more quickly — in some cases, in tens of iterations, rather than hundreds or thousands."
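The trade-off Facebook describes can be sketched with an ensemble of learned dynamics models, using the spread of their predictions as the measure of uncertainty. One common reading of "reduce model uncertainty", and the one assumed here, is that the planner earns a bonus for action sequences whose outcome the models disagree about; executing those actions yields exactly the data that shrinks the disagreement. The toy 1-D dynamics, the weight `beta`, and the random-shooting planner are hypothetical choices for illustration, not Facebook's method.

```python
import random
import statistics
from typing import List, Sequence, Tuple


def rollout(gain: float, state: float, actions: Sequence[float]) -> float:
    # Toy 1-D dynamics model: s' = s + gain * a, applied once per action.
    for a in actions:
        state += gain * a
    return state


def score_plan(ensemble: Sequence[float], state: float, actions: Sequence[float],
               goal: float, beta: float) -> float:
    # Predict the final state with every ensemble member.
    finals = [rollout(g, state, actions) for g in ensemble]
    # Task term: average negative squared distance to the goal.
    reward = statistics.fmean([-(s - goal) ** 2 for s in finals])
    # Uncertainty term: how much the models disagree (population std dev).
    disagreement = statistics.pstdev(finals)
    return reward + beta * disagreement


def plan(ensemble: Sequence[float], state: float, goal: float, beta: float,
         horizon: int = 5, candidates: int = 200,
         seed: int = 0) -> Tuple[List[float], float]:
    # Random-shooting planner: sample action sequences, keep the best-scoring one.
    rng = random.Random(seed)
    best_actions: List[float] = []
    best_score = float("-inf")
    for _ in range(candidates):
        actions = [rng.uniform(-1.0, 1.0) for _ in range(horizon)]
        score = score_plan(ensemble, state, actions, goal, beta)
        if score > best_score:
            best_actions, best_score = actions, score
    return best_actions, best_score
```

Here `ensemble = [0.8, 1.0, 1.2]` would represent three models that learned slightly different gains from limited data. With `beta = 0` the planner only chases the task reward; with `beta > 0` it also favors informative actions, and refitting the models on the resulting data is what drives the uncertainty down over iterations.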

Finally, there are new tactile sensors. Rather than focusing primarily on computer vision, Facebook is using soft touch sensors co-developed with researchers from UC Berkeley. These tactile sensors produce high-dimensional maps of shape data, which the AI learned to interpret. When subsequently given a goal, the AI was able to figure out how to roll a ball, move a joystick, and identify the correct face of a 20-sided die, without first being fed task-specific training data.

Facebook doesn't just want to make better robots, though taking on the likes of Boston Dynamics could make an interesting side project for the firm. Its broader aim is to see how artificial intelligence can become more effective when trained on the messy, often imperfect data of the real world. That could mean AIs better able to intuit the meaning of content (and thus potentially vet problematic Facebook posts more readily), react more effectively in uncertain environments, and learn new tasks without human involvement. It could even improve A/B testing and help power other new Facebook features.