Tesla's going to leapfrog rivals with Model 3 Autopilot

Tesla's Model 3 could take pole position in a new breed of near-autonomous vehicles, with the EV debuting a new generation of sensors perfect for real-time crowdsourced data. The upcoming car – which is shaping up to be Tesla's most popular to date, with over 325,000 people coughing up a $1,000 deposit to stake a spot on the reservations list – is the prime candidate for a new, lower-cost sensor array developed by Tesla hardware supplier Mobileye that's said to offer autonomous intelligence hitherto the realm of R&D labs, but at a fraction of what current hardware costs.

That expense is a barrier that automakers can't afford to ignore. Traditional sensors and real-time mapping systems like LIDAR laser arrays cost tens of thousands of dollars; Ford, for instance, was particularly excited at CES to unveil the newest example from Velodyne, which will allow it to use half as many sensors per car, cutting costs while extending operational range.

In a recent report, ABI Research highlighted the need for not only advanced sensors but high-definition maps and the ability for cars to effectively crowdsource data in real-time so as to better adapt to road conditions, along with unexpected or temporary hazards.

"As connected vehicles include more low-cost, high-resolution sensors," Dominique Bonte, Managing Director and Vice President at ABI Research, pointed out, "cars will capture and upload this data to a central, cloud-based repository so that automotive companies, such as HERE, can crowdsource the information to build highly accurate, real-time precision maps."

It may very well be Tesla, though, which pushes the envelope on popularizing just that sort of technology. A report from Globes in late March revealed Tesla CEO Elon Musk paid a secret visit to Mobileye in Israel to see the latest fruits of the company's research.

Built atop two prototype Model S cars, the system aims to do more than enable the vehicle to recognize pre-programmed hazards and road signage. Instead, a "digital neural network" (DNA) would allow it to effectively learn while it's driving.

So, the Mobileye-equipped car could potentially identify which areas of the road were empty but not strictly defined, such as the hard shoulder or an unraised sidewalk, and even create a safe path down roads without markings. Currently, the Autopilot system available on the Model S and Model X requires clearly delineated lanes which it can follow.

The DNA system would also be able to identify over 1,000 signs and different types of road markings, it's said, covering the styles predominant in a wide variety of countries.

Tesla has already demonstrated, by enabling Autopilot on the Model S effectively overnight courtesy of an OTA upgrade, that it's willing to buck the usual automaker trend of very gradual real-world trials and instead get cutting-edge features out to drivers sooner rather than later.

That might make it an ideal candidate to push this new hybridization of detailed mapping and real-time environment analysis. According to the Israeli business paper, the system will support only partial activation, too, allowing Tesla to stay close on the heels of regulations by enabling different features while keeping some of the more advanced abilities held in reserve.

Although the company has played the Model 3's exact Autopilot skills fairly close to its chest, Musk did confirm at the upcoming car's launch late last month that every one would include all the necessary hardware by default. The safety aspects of Autopilot – which include things like side-collision warnings – will be standard features, though the more autonomous technology will be an option.

Meanwhile, Musk has also been dropping hints about a bold new dashboard design, particularly around the steering wheel. Responding to criticisms of the wheel found in the prototype cars Tesla demonstrated at its launch event, Musk teased that the final system "feels like a spaceship", prompting speculation among some that Autopilot's self-driving abilities would be moved even further to the fore.

One possibility, for instance, is that the steering assembly and the 15-inch touchscreen that comprises the Model 3's entire driver display and infotainment system could be articulated. That could push the touchscreen – and a steering wheel assembly – closer to the driver when the car is being piloted manually, but stow that steering wheel and move the display into a more entertainment-focused position when Autopilot is engaged.

Volvo previewed one interpretation of such an approach with its Concept 26 dashboard at the LA Auto Show in 2015. There, the steering column retracts as the driver's seat pulls back, while the passenger-side portion of the dashboard rotates to reveal a larger touchscreen interface.

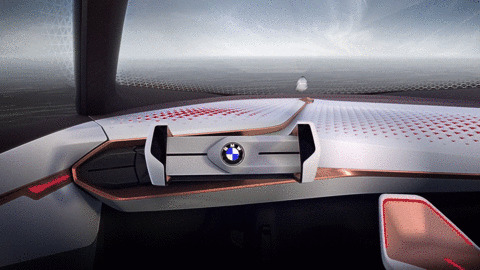

Similarly, Mercedes-Benz's F 015 Luxury in Motion concept has a retractable wheel for its autonomous mode, and even allows the front seats to rotate a full 180 degrees to turn the interior into a lounge. BMW's Vision Next 100 hides the wheel too, in addition to drawing back the dashboard.

That sort of layout would require a considerable degree of confidence in the autonomy system, of course. Current approaches keep the steering wheel within ready reach, and indeed prompt the driver to keep their hands on it, or at least nearby, lest they be required to take back control at short notice.

Even with existing self-driving options, owners of the cars can over-estimate the abilities of their vehicles. Tesla pushed out limits to its Autopilot system after some owners filmed themselves activating the technology and then leaving the driver's seat; in its latest iteration, Autopilot on the Model S and Model X requires a physical presence in the seat to remain operational. An Infiniti Q50 driver, meanwhile, demonstrated that car's lane-departure and active lane control capabilities by riding shotgun on the highway, despite the automaker's cautions otherwise.

Technologies like those Mobileye is apparently working on, better able to react to and accommodate areas of the road where lane markings or pre-configured mapping fall short, could help Tesla refine Autopilot so that it can take control in more situations.

Still, there are hurdles to jump in the meantime. Right now there's no single, common language for vehicles to use to communicate crowdsourced data between manufacturers. Since acquiring HERE, the consortium of Audi, BMW, and Mercedes-Benz parent Daimler has indicated that future cars from all three firms could become mobile mappers, but none has publicly committed to using HERE's common sensor language.

Tesla's cars equipped with Autopilot also intercommunicate, with the system self-learning as it builds experience on the road, but so far that data is kept to the company alone.

It's been suggested that the car-to-cloud connectivity needed for the data exchange that safe and pervasive autonomous driving depends on will only become practical when low-latency 5G networks are commonplace.

Before that full autonomy – Levels 4 or 5, according to the generally accepted hierarchy of self-driving technologies – might come more collaborative systems, Tesla's advancing Autopilot being one and Toyota's "Guardian Angel" another. Those might not offer the ability to hit a button and drive from A to B completely hands-off, but they could well cut road fatalities, accidents, and boredom on previously mundane journeys.