Prototype lie detection software analyzes words, gestures

Researchers at the University of Michigan have used real court case data to develop lie detection software. The software, which is currently in the prototype stage, eschews the typical polygraph methodology in favor of analyzing a speaker's gestures and words. With this approach, the software has been up to 75 percent accurate in identifying liars in trials, eclipsing humans' ability to figure out if someone is lying.

The researchers used 120 videos of real court cases, drawn from sources including The Innocence Project, to train their software to spot lies. This gave them more realistic examples of people lying: while volunteers can be motivated to lie convincingly with the proper reward, those in high-stakes situations (that is, facing jail time) have more motivation to lie, and are better examples for the software.

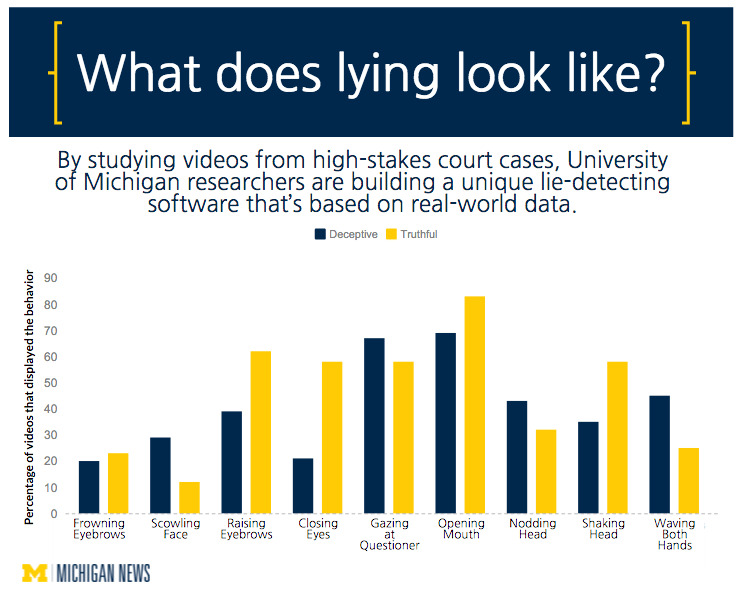

As a result of their work, the researchers found different "tells" that indicate someone may be lying. Among these are excessive certainty in speech, hand movements, too much eye contact, head nodding, and scowling. Frowning eyebrows were a less clear signal, being slightly more common in truthful statements, while closing eyes and head shaking were far more common when someone was telling the truth.

Verbal clues also indicate whether someone is being truthful. The researchers found, for example, that frequently saying "um" is a common sign of lying, as is verbal distancing, such as avoiding the words "we" and "I". The researchers are working on adding other data to the software, including physiological information like body temperature changes and heart rate data; doing so could help improve accuracy.
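
To make the verbal cues concrete, here is a minimal Python sketch of how a transcript could be turned into two simple numbers: how often the speaker says "um", and how often they use "I" or "we". This is not the researchers' code. The function name `verbal_cue_rates`, the word lists, and the tokenization are all assumptions made for illustration, and the output is a pair of raw rates, not a lie score.

```python
import re

# Hypothetical word lists, taken from the cues named in the article.
FILLERS = {"um"}
FIRST_PERSON = {"i", "we"}


def verbal_cue_rates(transcript: str) -> dict:
    """Return the share of words that are fillers or first-person pronouns."""
    # Lowercase the text and split it into simple word tokens.
    # Contractions such as "I'm" stay as one token and are not counted.
    words = re.findall(r"[a-z']+", transcript.lower())
    total = len(words)
    if total == 0:
        return {"filler_rate": 0.0, "first_person_rate": 0.0}

    fillers = sum(1 for w in words if w in FILLERS)
    first_person = sum(1 for w in words if w in FIRST_PERSON)
    return {
        "filler_rate": fillers / total,
        "first_person_rate": first_person / total,
    }


rates = verbal_cue_rates("Um, I think, um, we left early")
print(rates)
```

A real system would feed features like these, together with the gesture annotations, into a trained classifier rather than reading them directly.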

SOURCE: University of Michigan