Google Lens new filters start rolling out to iOS, Android

Google Assistant can be pretty smart about the things you ask it, but it has the limitation of requiring you to speak your question or at least type it. There are things, however, that are not exactly easy to ask out loud and are better expressed through images. That's where Google Lens comes in, sort of like the visual side of Google Assistant. A new update that's now rolling out to both iOS and Android users makes it even smarter by showing you the most popular dishes in a restaurant when you point it at the menu.

Just like the now-discontinued Google Goggles, Google Lens lets you see the world through Google's eyes. Every real-world object becomes a point of interest that, thanks to a mix of machine learning, computer vision, and Google's Knowledge Graph, is also something you can learn more about. That's handy for identifying unfamiliar landmarks or products that caught your fancy.

Not everyone travels to new places, however, nor does everyone immediately want to buy items they see in public. At I/O 2019 earlier this month, Google revealed new features that could be more useful for more people every day. And, yes, one of those involves seeing translated text overlaid right on top of the original foreign-language text.

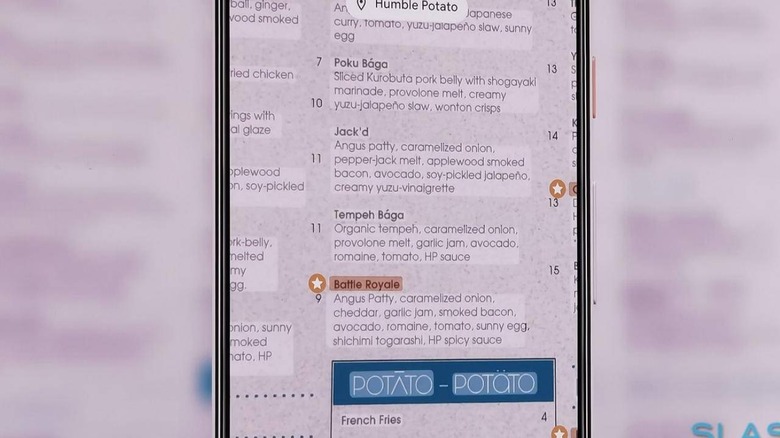

The biggest new feature is probably the Dining filter. Simply point Google Lens at a menu (with the restaurant name visible) and it will overlay popular dishes. You can tap on one and see what it looks like, including what others are saying about it. And when you're done, point Google Lens at a receipt and it will calculate the tip or split the bill for you.

Google Lens is available on Android via Google Assistant and the Google Photos app. iOS users can access it via the Google app and the Google Photos app. These new filters are rolling out to all users, but Android phone owners should note that the filters will only work if their phone is ARCore-compatible.