Google Lens: Assistant and Photos get a super smart update

This week Google revealed a new push for its "Google Lens" initiative (and eventual app integration). Much like what we've seen in the past with Google Goggles, Google Lens aims to make the things Android smartphones see recognizable. As Google put it today, Google Lens will be a "set of vision-based computing capabilities."

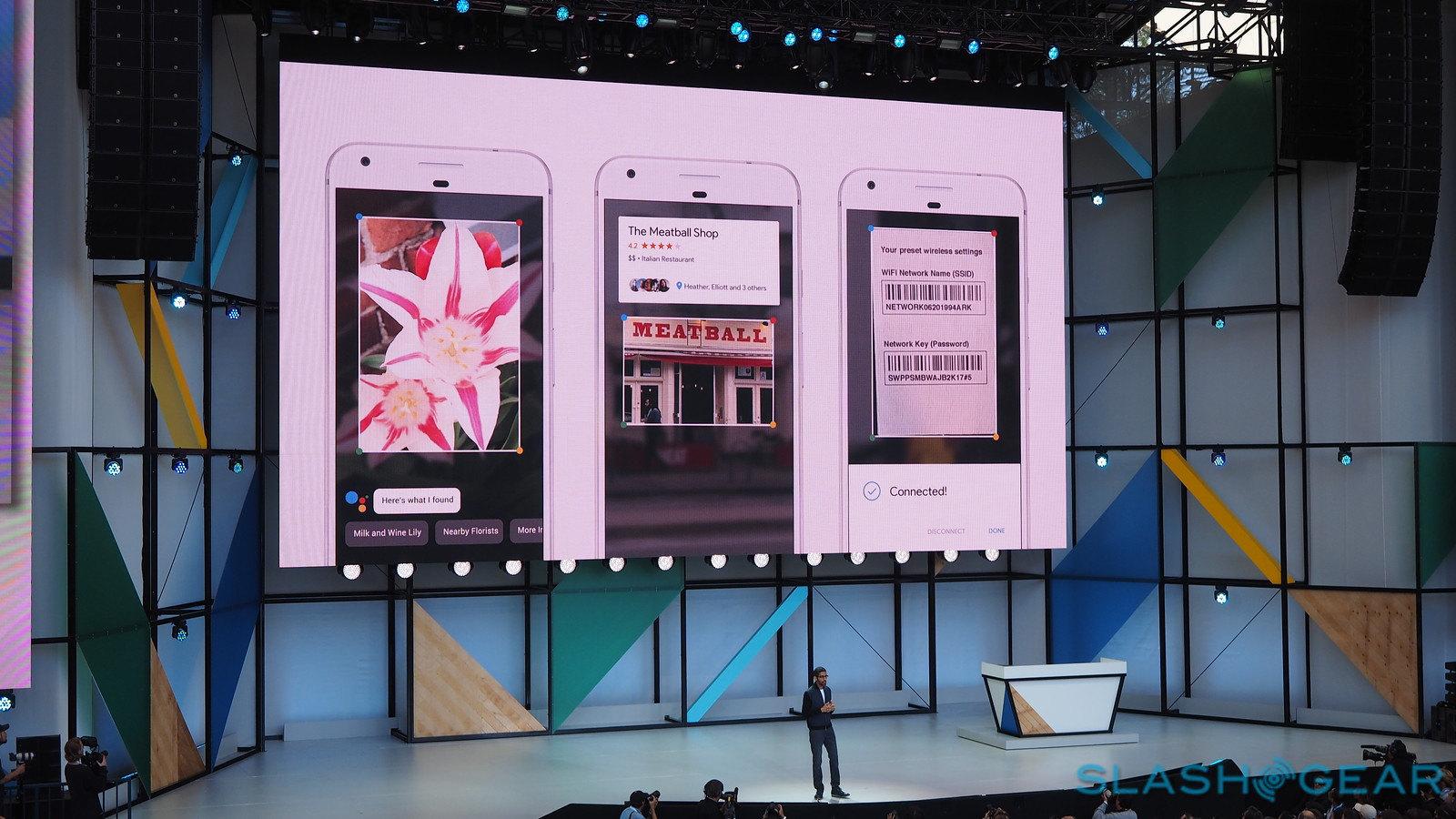

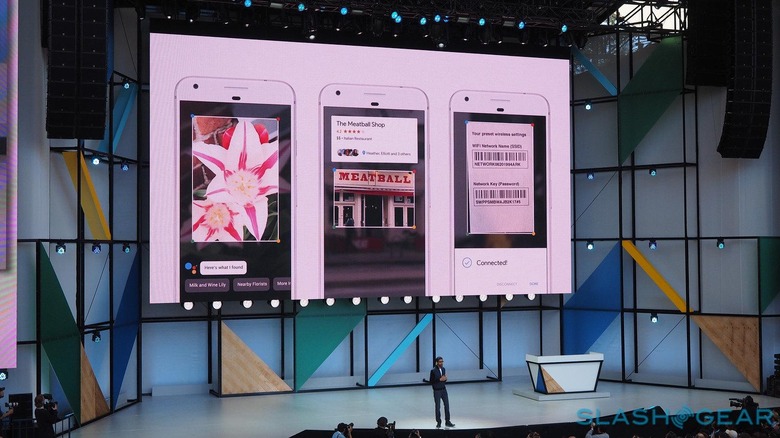

Google Lens will arrive first in a couple of apps for Android devices. Using Google Lens, users will be able to identify objects in Google Assistant. In Google Photos, Google Lens will be able to identify objects that couldn't be identified there before.

As you'll see in the chart shown by Google here, image recognition has become far more accurate over the past several years. According to Google, computer vision algorithms now have a lower vision error rate than humans do. Users will be able to use Google Lens to identify objects in the world around them.

Users will be able to, for example, point the camera at the set of codes printed on a Wi-Fi router and obtain the network name and password. This works through optical character recognition (OCR), which is impressive in itself. The system can also be pointed at restaurants and determine which restaurant is in its field of vision, working in concert with Google Maps info and GPS.

Google Lens also works with Google Translate. At a restaurant, users will be able to translate a menu from any language covered by Google Translate to any other language – Japanese to English, for example.

At the moment we do not know the exact time and date of the update to Google Assistant and/or Google Photos. When the update happens, we'll bring you the links to the APK download for both app updates. For now, stay tuned as SlashGear continues to cover all Android updates throughout the Google I/O 2017 event series!