Ford might just move the driver, not go driverless

Ford's new Palo Alto research center may have driverless cars on the menu, but technology that moves the human driver out of the car and across the country might be closer to primetime if engineers have their way. Virtual valets and remotely piloted car sharing schemes could take advantage of increasingly electrified cars and faster LTE networks, Ford's Mike Tinskey explained to me, with a controller potentially thousands of miles away taking the wheel when a local driver isn't available or practical. Right now, that means going on a joyride in an Atlanta parking lot while you're actually sat at a Logitech gaming wheel in California.

Unfortunately there's not a 600+ horsepower Ford GT at the other end of the wireless connection. Instead, Ford's engineers and their partners at the Georgia Institute of Technology in Atlanta outfitted a somewhat less powerful golf cart with the necessary kit.

In Palo Alto, you sit at a triple monitor rig with the Logitech wheel and pedals, and see what's in front of the golf cart through streaming video cameras. Exactly how much of the periphery is visible is customizable; initially, Ford showed us a relatively broad view spread across all three displays, but you can cut that down to a single camera if you don't have the screen real-estate for it.

After that, it's a matter of using a single pedal to control the accelerator, and steering around the lot. Ford overlays green and red lines to indicate the width of the cart and where the current steering angle should take it, but in the current implementation the sensor load is low. There's a local kill-switch, in case you decide to go on a remote rampage and crush cones, but none of the sensors Ford says it would expect to fit were the product commercialized.
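Ford didn't explain how those guide lines are computed, but the idea can be sketched with a standard kinematic bicycle model, which turns a steering angle into a turning arc and offsets it by half the vehicle's width on each side. Everything here is an assumption for illustration: the function name `predicted_path`, the wheelbase and track figures, and the five-metre look-ahead are hypothetical, not numbers from Ford or Georgia Tech.

```python
import math


def predicted_path(steering_deg, wheelbase_m=1.7, track_m=1.2,
                   length_m=5.0, steps=10):
    """Project the cart's left and right edges along its predicted arc.

    Returns two lists of (x, y) points in metres, with x pointing forward
    and y pointing left of the cart. All default dimensions are
    illustrative guesses, not measurements of Ford's test cart.
    """
    delta = math.radians(steering_deg)
    half_width = track_m / 2
    left, right = [], []
    for i in range(steps + 1):
        s = length_m * i / steps  # distance travelled along the arc
        if abs(delta) < 1e-6:
            # Wheels straight: the path is a straight line ahead.
            x, y, heading = s, 0.0, 0.0
        else:
            # Signed turning radius; positive means a left turn.
            radius = wheelbase_m / math.tan(delta)
            heading = s / radius
            x = radius * math.sin(heading)
            y = radius * (1 - math.cos(heading))
        # Unit vector pointing to the cart's left at this heading.
        nx, ny = -math.sin(heading), math.cos(heading)
        left.append((x + half_width * nx, y + half_width * ny))
        right.append((x - half_width * nx, y - half_width * ny))
    return left, right


if __name__ == "__main__":
    left_edge, right_edge = predicted_path(15.0)
    for l, r in zip(left_edge, right_edge):
        print(f"left=({l[0]:.2f}, {l[1]:.2f})  right=({r[0]:.2f}, {r[1]:.2f})")
```

A real overlay would still have to map these ground-plane points into the camera image, which depends on how each camera is mounted and calibrated.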

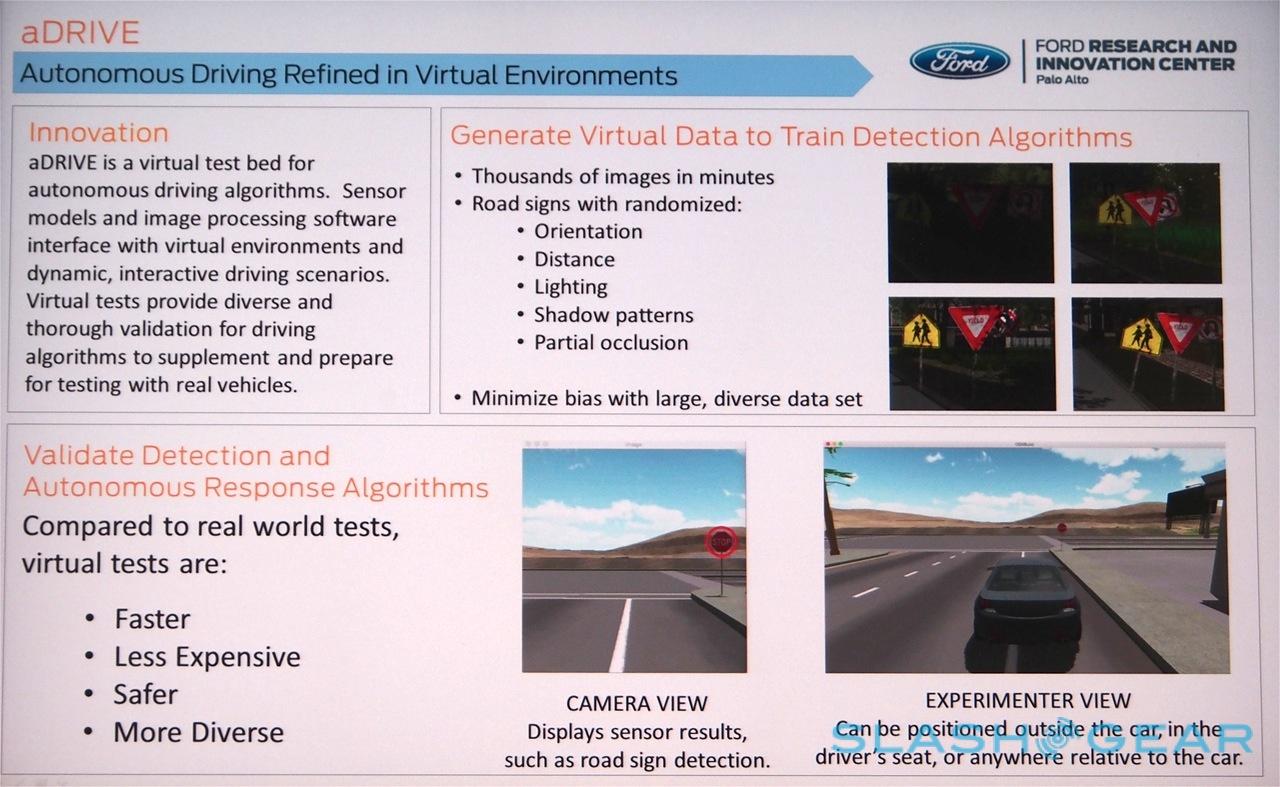

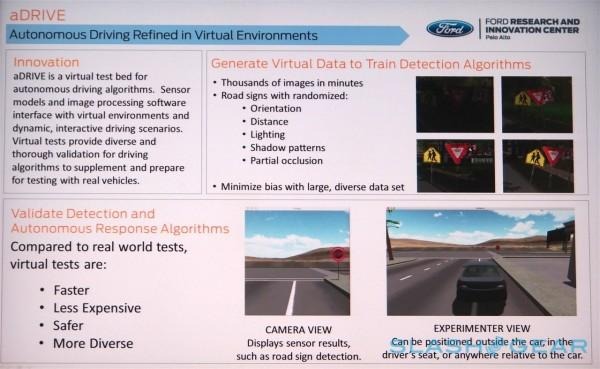

Those sensors would likely do object detection, such as spotting pedestrians about to cross in front, but they could also tie into more advanced AI that another team at Ford's R&D lab is working on. Part of a division led by Jim McBride, who has spent a decade on autonomous vehicles – including DARPA projects – aDRIVE uses game engines to build a virtual driving simulator against which sensor models and image processing tech can be tested.

Rather than send cars like the Fusion Hybrid Autonomous Research Vehicle that Ford is loaning to Stanford out onto the streets every time there's a new sign-identification build to put through its paces, Ford can set the autonomous learning algorithms loose into a virtual road system.

That also allows testing to be far more intensive: you can pepper the streets with stop signs and speed warnings, for instance, at a far greater density than you'd ever encounter in the real world, while those signs can also be rotated, partially obscured with paint or snow, or have their sizes and shapes changed.
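To get a feel for how quickly those variations multiply, here is a minimal sketch that enumerates every combination of sign type, rotation, obstruction and size. It doesn't touch a game engine, and the `SignVariant` class, the `sign_variants` function and the specific values are all hypothetical, chosen for illustration rather than taken from Ford's simulator.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class SignVariant:
    """One synthetic road sign to place in a virtual scene (hypothetical)."""
    kind: str          # e.g. "stop" or "speed_limit_35"
    rotation_deg: int  # how far the sign is twisted away from the road
    occlusion: str     # what, if anything, partly hides the sign face
    scale: float       # size relative to a standard sign


def sign_variants(kinds, rotations, occlusions, scales):
    """Return every combination of the supplied variation axes."""
    return [SignVariant(*combo)
            for combo in product(kinds, rotations, occlusions, scales)]


if __name__ == "__main__":
    variants = sign_variants(
        kinds=["stop", "speed_limit_35"],
        rotations=[0, 15, 30],
        occlusions=["none", "paint", "snow"],
        scales=[0.75, 1.0, 1.25],
    )
    print(len(variants), "variants, for example:", variants[0])
```

Even this small grid yields dozens of distinct signs from two base types, which hints at why a virtual road system is easier to saturate with test cases than a real one.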

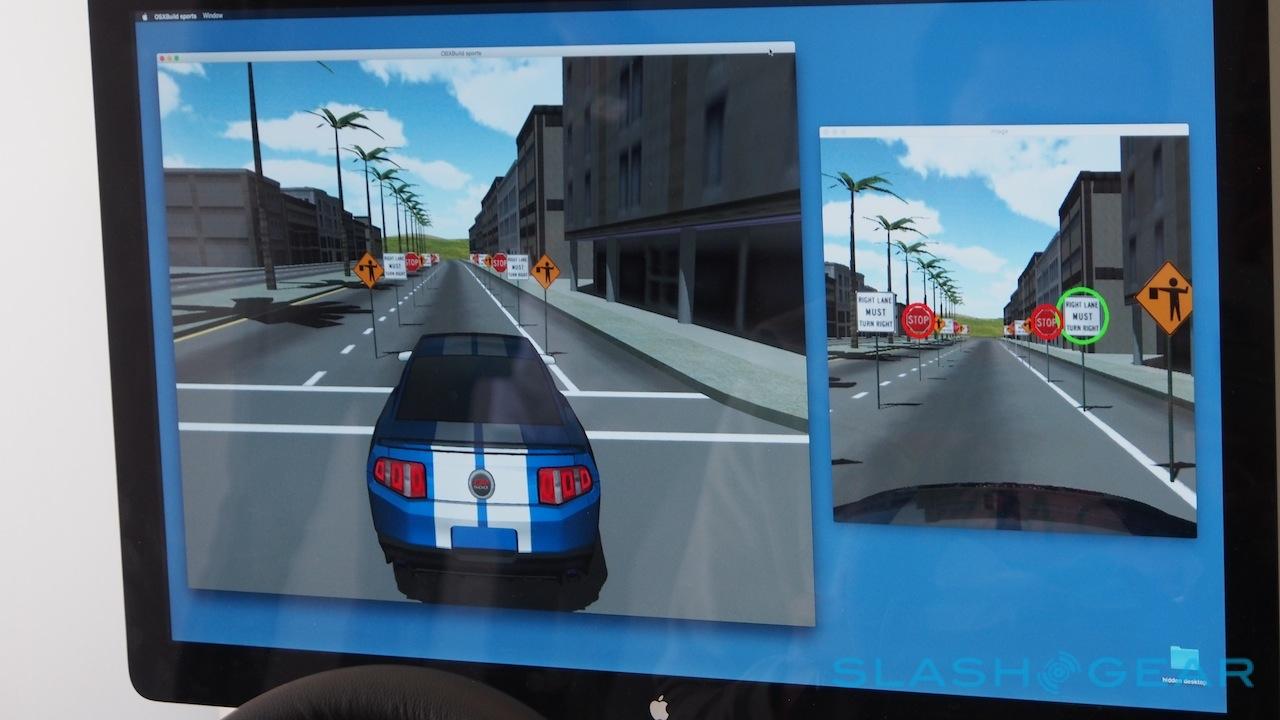

"The reason we're using game engines is because games are getting very realistic now," Ford computer vision engineer Arthur Alaniz explained to me. Currently, the team is experimenting with various different underlying engines – they declined to name specifics – hunting for the most efficient one. The AI spots signs and flags them with different colored boxes and circles, reading things like speed limits or lane guidance.

It's technologies like these which leave Ford confident that, contrary to what map specialists like Nokia HERE might suggest, you don't need to have a perfect understanding of every territory before you can set a self-driving car loose in it. Instead, Alaniz argued, it's just a case of having a different, and perhaps more cautious, algorithm in command, reacting to the topography and signage in real-time.

Remotely piloting a car rather than leaving it entirely to a robot driver may not be as dramatic, but it could be market ready a whole lot sooner. Ford hasn't committed to actually launching it yet, but the assumption is that the legal hurdles holding back fully-autonomous vehicles could be less strenuous to pass if there's still a human in command, even if they're not physically present.

The upshot might be car sharing schemes where vehicles could be moved around an urban environment to better suit driver demand, or parking garages where virtual valets could squeeze cars into spaces without having to leave room for doors to be opened.

Is it practical? "As cars get more electrified, it gets easier and easier to do this in the real world," Tinskey told me, though the limits of the off-the-shelf technology Ford is relying upon are being felt. Already, the team has encountered a noticeable increase in latency when students at the Georgia Institute of Technology get out of class and available bandwidth on the 4G network narrows.

"I wouldn't say it's ready for prime time," Tinskey concludes, "but it's giving us some interesting results."
