Car assistance tech like Autopilot is misleading, says study

Driver assistance systems like Tesla Autopilot are confusing potential users and even leading them to unsafely assume the tech is more capable than it is, according to new research from one vehicle safety organization. A new study by the Insurance Institute for Highway Safety (IIHS) suggests that poor understanding of just what systems like adaptive cruise control and lane-keeping assistance are able to do – and what they can't – is undermining the potential safety benefits of such technologies.

While autonomous vehicle research is underway across both the car and tech industries, and has been for some time, there are currently no truly self-driving cars on the market. Instead, by the generally adopted standards of driving automation developed by SAE International, commercially available vehicles top out at Level 2 Advanced Driver-Assistance Systems (ADAS).

At that point, the SAE says, "an automated system can assist the driver with multiple parts of the driving task." However, it's not an opportunity to nap in the rear seat. "The driver must continue to monitor the driving environment and be actively engaged," the organization points out.

What's in a name?

For that sort of full exchange of responsibility over to the vehicle's systems, you'd need a Level 4 or Level 5 system. The IIHS' concern, though, was that the names of some of the driver-assistance technologies currently available could lead less tech-savvy drivers to assume that's what they're getting. "Despite the limitations of today's systems," the Institute said today, "some of their names seem to overpromise when it comes to the degree to which the driver can shift their attention away from the road."

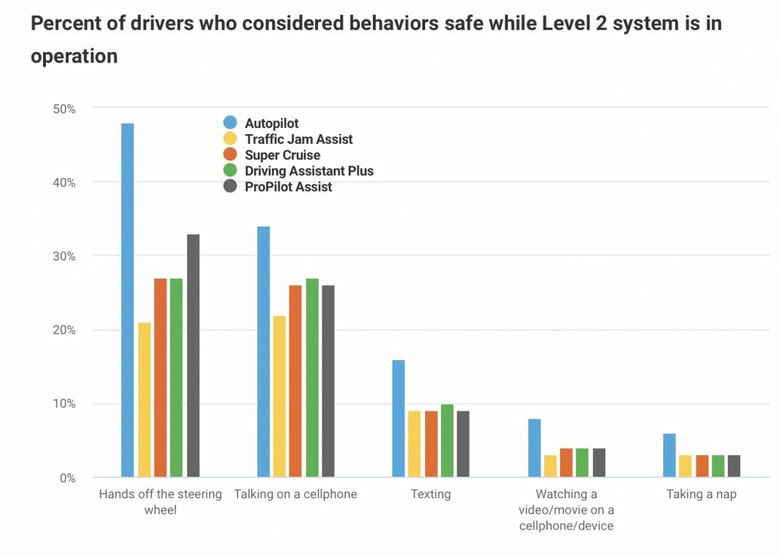

It asked more than 2,000 drivers about five of the more advanced systems available today. As well as Tesla's Autopilot, that included Audi and Acura's Traffic Jam Assist, Cadillac's Super Cruise, BMW's Driving Assistant Plus, and Nissan's ProPilot Assist. Participants were told the name of the system, though not the automaker, and given no further details about what the technology consists of or indeed promises on the road.

Of the names, Autopilot gave the most cause for concern. 48 percent of people said they thought a system called Autopilot would make it safe to take your hands off the wheel, versus 33 percent or less for the other technologies. That's despite only one – Cadillac's Super Cruise – actually allowing such operation, since it relies upon gaze tracking instead.

"Autopilot also had substantially greater proportions of people who thought it would be safe to look at scenery, read a book, talk on a cellphone or text," the IIHS reports. "Six percent thought it would be OK to take a nap while using Autopilot, compared with 3 percent for the other systems."

Just what is the car doing?

It's not the first time that driver-assistance tech nomenclature has been questioned for its potential to mislead owners. While the technical abilities of such ADAS are generally viewed positively by experts, the way those abilities – and, conversely, their limits – are communicated has been less impressive. Tesla, for example, has long maintained that despite calling its system Autopilot, it makes clear when the feature is enabled that drivers must keep their hands on the wheel and pay attention to the road conditions.

The way in which ADAS communicates with drivers is another point of concern. In a second IIHS study, the organization says, the way vehicles display their system status through the instrumentation was examined. Eighty drivers viewed video of a 2017 Mercedes-Benz E-Class using the Drive Pilot system – selected because its interface is typical of such displays – and were asked what the ADAS was trying to tell them.

Despite half of the group being primed on what the iconography meant, most of the eighty struggled with key aspects of the system when in use. A car spotting a vehicle ahead and adjusting speed automatically was readily comprehended, but a vehicle ahead being visible to a human but out of sight of the car's detection sensors – and thus not showing up on the dashboard – was generally not understood.

"Most of the people who didn't receive training also struggled to identify when lane centering was inactive," the IIHS continues. "In the training group, many people got that right. However, even in that group, participants often couldn't explain why the system was temporarily inactive."

There's no easy answer to the Autopilot dilemma

The balance between providing too little information and, conversely, delivering system status overload is a tricky one. The IIHS suggests that training on ADAS technologies at car dealerships during an orientation session might help. It's questionable, though, just how much of that information would be retained when a new vehicle owner is in the honeymoon period with their car.

Most systems only show a basic graphic of a car when a vehicle is recognized ahead. Here, Tesla is actually fairly unusual in that its Autopilot interface shows live, moving representations of other vehicles ahead of, and to the side of, the driver's car.

The differences between each vehicle's displays – and the lack of clear guidance for communicating ADAS status from regulators or industry bodies – may well be why Level 3 vehicle development has effectively stalled. At that point, while the car is expected to be able to drive itself, the human behind the wheel is still expected to step in should that be necessary. It's a blurred line situation that has led some automakers to focus on Level 4 and higher instead, not because Level 3 technologies don't exist, but because the human involvement can't necessarily be counted on.