Kinect Bringing Sight To The Blind, Sorta.

Some graduate students at Universität Konstanz in Germany have put together a project based on Microsoft's popular Kinect. Instead of using it as a gaming controller, they take the depth data from the infrared camera of a helmet-mounted Kinect and use it to relay audio instructions through a wireless headset. This could potentially warn blind users about obstacles, and give them directions, at a greater distance than the white cane or guide dog in common use today. They call it NAVI, Navigational Aids for the Visually Impaired.
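To make the obstacle-warning idea concrete, here is a minimal Python sketch of one way a depth frame could be turned into a spoken warning. It is not the NAVI team's code. The frame format (a 2D grid of distances in millimeters, with 0 meaning "no reading"), the 1.5 m warning threshold, the left/ahead/right split, and the function names are all assumptions made for illustration.

```python
from typing import List, Optional

# Assumed format: a depth frame is a 2D grid of distances in millimeters,
# with 0 marking pixels where the sensor got no reading.
DepthFrame = List[List[int]]


def nearest_in_columns(depth: DepthFrame, start: int, stop: int) -> Optional[int]:
    """Return the smallest valid depth (mm) in columns [start, stop), or None."""
    valid = [d for row in depth for d in row[start:stop] if d > 0]
    return min(valid) if valid else None


def obstacle_warning(depth: DepthFrame, warn_mm: int = 1500) -> Optional[str]:
    """Build a spoken warning for the closest obstacle nearer than warn_mm."""
    width = len(depth[0])
    third = width // 3
    # Hypothetical split of the field of view into three vertical regions.
    regions = {
        "left": (0, third),
        "ahead": (third, width - third),
        "right": (width - third, width),
    }
    closest = None  # (distance_mm, region_name) of the nearest close obstacle
    for name, (start, stop) in regions.items():
        d = nearest_in_columns(depth, start, stop)
        if d is not None and d < warn_mm and (closest is None or d < closest[0]):
            closest = (d, name)
    if closest is None:
        return None  # nothing close enough to mention
    distance_m = closest[0] / 1000
    if closest[1] == "ahead":
        return f"Obstacle ahead, {distance_m:.1f} meters"
    return f"Obstacle on the {closest[1]}, {distance_m:.1f} meters"
```

Picking only the single nearest obstacle keeps the audio channel uncluttered, which matters when speech is the user's main source of information.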

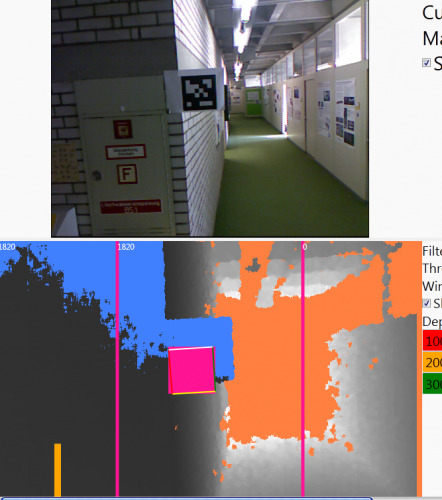

The pair of students wanted to do more than just build that system. They also put together a limited augmented reality (AR) system using the Kinect's standard RGB camera, which sits alongside the infrared projector and infrared camera the Kinect uses for depth sensing. The AR system is set up to read various AR bitmap tags like the one pictured below. This lets the system interact with the outside world and give visually impaired users access to more information. It also serves as a proof of concept for other AR ideas, such as bitmap tags that trigger virtual events.

For example, if you walk toward a door, the output will be "Door ahead in 3", "2", "1", "pull the door", where each part of the message depends on your distance to the marker on the door.
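A distance-driven countdown like this can be sketched in a few lines of Python. This is only an illustration under assumptions: the one-meter step per count, the 0.5 m point at which the action is spoken, and the function names are hypothetical, not taken from the NAVI project. The helper only speaks when the message changes, so the user isn't told the same thing on every frame.

```python
import math
from typing import Iterable, List, Optional

STEP_M = 1.0    # assumed: one countdown step per meter to the marker
ACTION_M = 0.5  # assumed: distance at which the door action is spoken


def door_message(distance_m: float, action: str = "pull the door") -> Optional[str]:
    """Map the distance to a door marker onto a countdown message."""
    if distance_m <= ACTION_M:
        return action
    count = math.ceil(distance_m / STEP_M)
    if count > 3:
        return None  # marker too far away to announce yet
    return "Door ahead in 3" if count == 3 else str(count)


def announcements(distances: Iterable[float]) -> List[str]:
    """Collect messages, emitting each one only when it differs from the last."""
    spoken: List[str] = []
    last: Optional[str] = None
    for d in distances:
        msg = door_message(d)
        if msg is not None and msg != last:
            spoken.append(msg)
        last = msg
    return spoken


# Simulated approach toward a tagged door, one distance reading per frame.
print(announcements([4.2, 2.8, 2.5, 1.9, 1.4, 0.9, 0.4, 0.3]))
# → ['Door ahead in 3', '2', '1', 'pull the door']
```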

Together, these systems allow a blindfolded student to walk through a short course with NAVI acting as a guide. The team put together a short demonstration video showing the components and operation of the system. They talk a little about the vibrotactile Arduino system in the belt, but I don't quite understand what's going on there. My guess is that as the user gets closer to walls, the belt vibrates harder as a tactile warning.
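If that guess is right, the core of the belt logic could be as simple as the Python sketch below, which maps the distance to the nearest wall onto a motor intensity in the 0-255 range an Arduino's `analogWrite()` accepts. Everything here is an assumption illustrating the guess above rather than the team's design: the 0.3 m and 2 m limits, the linear ramp, one reading per belt motor, and the function names.

```python
from typing import List


def vibration_level(distance_mm: float, near_mm: float = 300, far_mm: float = 2000) -> int:
    """Return a 0-255 motor intensity: 0 at or beyond far_mm, 255 at or inside near_mm."""
    if distance_mm >= far_mm:
        return 0
    if distance_mm <= near_mm:
        return 255
    # Linear ramp: the closer the wall, the stronger the vibration.
    fraction = (far_mm - distance_mm) / (far_mm - near_mm)
    return round(255 * fraction)


def belt_levels(sector_distances_mm: List[float]) -> List[int]:
    """Hypothetical belt with one motor per direction: one intensity per sector."""
    return [vibration_level(d) for d in sector_distances_mm]


print(belt_levels([2500, 1150, 100]))  # → [0, 128, 255]
```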

[via HCI Blog @ Universität Konstanz]