From Cyborgs to Project Glass: the Augmented Reality Story

Google's Project Glass has been through the usual story arc – rumors, a mind-blowing concept demo, rabid excitement, practicality doubts and then simmering mistrust – in a concentrated three-month period, but the back story to augmented reality is in its fifth decade. The desire to integrate virtual graphics with the real world in a seamless way can be traced back to the days when computers could do little more than trace a few wireframes on a display; it's been a work in progress ever since. If Google's vision left you reeling, the path AR has taken – and where it might go next – could blow your mind.

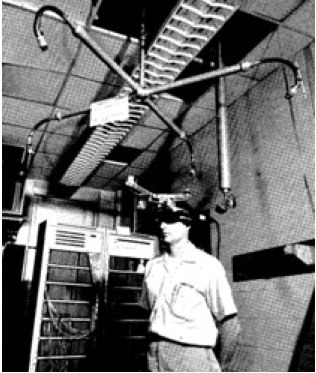

In fact, the first real example of augmented reality was demonstrated back in 1968, with Ivan E. Sutherland of the University of Utah in Salt Lake City showing off a head-mounted three-dimensional display. He described [pdf link] how the "kinetic depth effect" – projecting two slightly different images to the user's eyes, which shift in accordance with their movement – could be controlled by different location-tracking systems, though the graphics themselves were merely wireframe outlines.

Sutherland's idea – although seemingly obvious today – was that if the tracking sensors were accurate enough and the computer-created graphics sufficiently responsive, the human brain would comfortably combine them with the real-world view. "Special spectacles containing two miniature cathode ray tubes are attached to the user's head," Sutherland wrote. "A fast, two-dimensional, analog line generator provides deflection signals to the miniature cathode ray tubes through transistorized deflection amplifiers. Either of two head position sensors, one mechanical and the other ultrasonic, is used to measure the position of the user's head."

[aquote]Augmented reality was coined by Boeing engineers in 1992[/aquote]

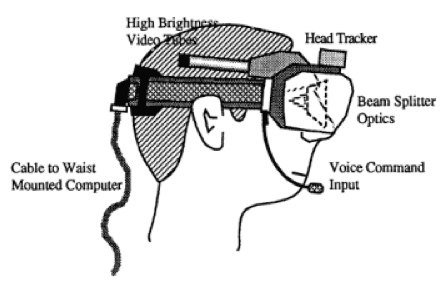

"Augmented reality" as a term, meanwhile, was coined several decades later by Boeing engineers Tom Caudell and David Mizell. They created a head-mounted display of their own, dubbed "HUDset," in 1992, which could overlay airplane wiring blueprints over generic looms. As with Sutherland's system, a combination of head-tracking and individual displays for each eye were used, though the computer itself could be waist-mounted.

Caudell and Mizell also identified one of the key advantages of augmented reality over virtual reality – that is, an entirely computer-generated world – namely that it was less processor-intensive. Most of the user's perspective would already be supplied, with the AR system only needing to busy itself with the added details.

However, that opened up a problem of its own: the importance of accurate location and positioning measurements so that virtual graphics would correctly line up with real-world objects. It's worth remembering that GPS only became fully operational in 1994, and it wasn't until the new millennium that regular users had access to the accurate data that had previously been reserved for the military. Even then, gross positioning information needed to be supplemented by far more precise information on head angle, direction and more; otherwise Sutherland's original principles would be missed.

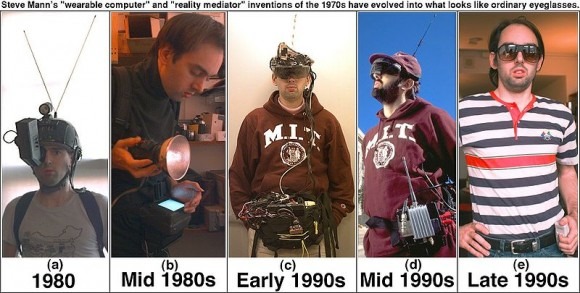

While the Boeing system was intended to be used in controlled manufacturing environments, AR and wearables pioneer Steve Mann took the technology out into the wilderness. A founding member of the Wearable Computers group at MIT's Media Lab and now a tenured professor at the Department of Electrical and Computer Engineering at the University of Toronto, Mann actually prefers the term "mediated reality" to AR, and since the 1980s has been sporting wearable computers of his own design. Between 1994 and 1996 he live-streamed the view from a wearable webcam to a publicly accessible website, receiving messages from viewers in a head-up display. He notoriously described himself as the "world's first cyborg" to the press, and in 2002 challenged airport security after they allegedly forcibly removed his equipment, causing over $50,000 of damage.

[aquote]Mann's initial systems were outlandishly bulky[/aquote]

Mann's initial systems were outlandishly bulky, hampered by the limited battery life and processing power of portable computers of the time, and included such bizarre elements as "rabbit ear" antennas for wireless connectivity. Nonetheless, the researcher persisted, until by the 1990s the eyepiece itself was compact enough to be accommodated in a pair of oversized sunglasses.

In "mediating" the world around him digitally, Mann sought not only to introduce new data into his perspective but reuse and replace elements of the real environment in ways that more suited his needs. If the personalized advertising of Minority Report has become the digital bogeyman of AR naysayers, then Mann's work on so-called Diminished Reality with student James Fung represents mediated reality wresting back the reins. Mann showed how real-world billboards and signs could be overlaid with his own data, such as messages or location-based services alerts, in effect repurposing the visual clutter of adverts into personal screens.

[vms 4964a558e65bdd07ff04]

That research went on to help inspire a separate but similar project, The Artvertiser, which overlaid artwork onto adverts identified by optical recognition. "The Artvertiser software is trained to recognize individual advertisements," project leads Julian Oliver, Damian Stewart and Arturo Castro wrote, "each of which become a virtual 'canvas' on which an artist can exhibit images or video when viewed through the hand-held device. We refer to this as Product Replacement." A version of their app has already been released for the Android platform.

Along the way, however, some of Mann's more interesting discoveries have been around the way others react to his wearable tech, rather than how he himself experiences it. "Even a very small size optical element, when placed within the open area of an eyeglass lens, looks unusual," Mann writes. "In normal conversation, people tend to look one-another right in the eye, and therefore will notice even the slightest speck of dust on an eyeglass lens."

In fact, he suggests, less attention is drawn when both lenses have the same apparent display assembly in place, even if only one half of it actually works, as the symmetry is less jarring. He also coined the term sousveillance [pdf link], effectively the inverse of surveillance, where "body-borne audiovisual and other sensors [are used for] capture, storage, recall, and processing" of the world around the individual.

[aquote]EyeTap uses a beam-splitter and can be far more discreet[/aquote]

Most recently, Mann has been working on EyeTap, the latest iteration of his wearable display technology. Unlike much of his earlier research, which was released for others to tinker with and adapt, EyeTap has been more aggressively patented amid talk of a commercial release. Where current wearable displays – such as the Lumus panels we saw recently – have been "one-way", adding computer graphics to the field of view but relying on a separate camera for digital input, EyeTap uses a beam-splitter directly ahead of the eye to bounce the incoming light down a fiber-optic path to a so-called aremac ("camera" backwards), and then return it.

This aremac can process the incoming light, modify it and then bounce it back to the beam-splitter to be overlaid on top of the real-world view. The system means the visible components of the display can be far more discreet: in a custom setup crafted by Mann and Rapp Optical, the beam-splitter is a tiny angled block in the center of the right lens, with the fiber-optic path and other components actually making up the frame itself. Bulkier components can be hidden either at the back of the neck or in a pocket.

Mann proposed that even the tiny beam-splitter could be further reduced, bringing it down to something along the lines of the etching involved in regular bifocal lenses. However, the EyeTap project has been quiet, at least publicly, since the late 2000s, and there's no sign of the commercial version shown before, which looks more like a Borg eyepiece [pdf link] than the discreet Rapp prototype.

That leaves others to take the field, and the past few years have seen no shortage of augmented reality concepts, of which Project Glass is only the most recent. Nokia's Mixed Reality idea of 2009 was, like the now-lampooned Google video, merely a "what if" render, but the pieces are finally slotting into place for products you can actually go out and buy.

[aquote]These aren't tiny brands, we can expect some high-profile wearables[/aquote]

Last month, Lumus confirmed to us that it is supplying wearable eyepieces to several OEMs which plan to launch as early as 2013. These aren't tiny brands off the radar, either; the company wouldn't confirm any names, but told us we could expect some high-profile, readily recognized firms on the roster. Gaming, multimedia and smartphone-style functionality have all been cited as possible use-cases.

Pricing shouldn't be sky-high, either: Lumus doesn't decide the RRP, obviously, but the goalposts have apparently been set at between $200 and $600, depending on the complexity of the wearables, whether they use a separate wired processing pack or run off batteries integrated into the glasses themselves, and whether they offer monocular or binocular AR vision. Even Google – not, to be clear, necessarily a Lumus client – is confident it can translate Project Glass from glitzy mockup to real-world device. "It'll happen," project member and Google VP Sebastian Thrun insisted to skeptics, "I promise."

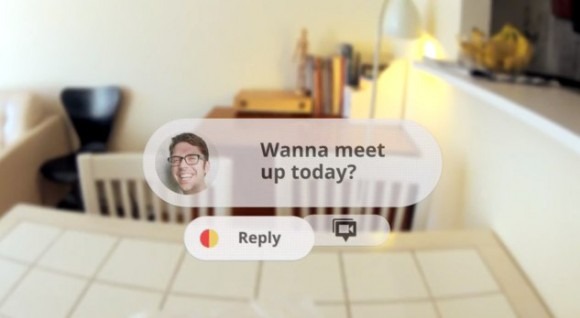

With technology finally catching up to mainstream ambitions, the next challenge is convincing the public that wearables are something they might want to, well, wear. One regular criticism of Project Glass (like other AR systems before it) has been that it will create a world of ever-more insular people, not even having to pull out their smartphones in order to put up a barrier between them and the real world.

That's a matter of software, largely, but also of ethos. It's notable that Steve Mann's key EyeTap patents express the wearable primarily as a newscasting and sharing system, as something that can create a communal experience divorced from physical proximity:

"In wearable embodiments of the invention, a journalist wearing the apparatus becomes, after adaptation, an entity that seeks, without conscious thought or effort, an optimal point of vantage and camera orientation. Moreover, the journalist can easily become part of a human intelligence network, and draw upon the intellectual resources and technical photographic skills of a large community. Because of the journalist's ability to constantly see the world through the apparatus of the invention, which may also function as an image enhancement device, the apparatus behaves as a true extension of the journalist's mind and body, giving rise to a new genre of documentary video. In this way, it functions as a seamless communications medium that uses a reality-based user-interface"

(EyeTap patent 6,614,408 description)

The smartphone has become our gateway into digital social networking: any disconnect between it and the real world arguably comes about because the method of delivery is inefficient. Google's concept of bouncing icons and pop-up dialog bubbles may not address that entirely, but if the recent avalanche of smartphones and mobile apps is anything to go by, put hardware into developers' hands and – no matter how rudimentary – the software will swiftly progress. A "seamless communications medium that uses a reality-based user-interface" is how Mann describes it: science-fiction becoming science fact.

[Image credits: EyeTap, Steve Mann, Daniel Wagner]