Apple's first ever AI paper is on how to train your AI

Apple isn't exactly a company you'd immediately associate with artificial intelligence research, but of course it does some. After all, it has Siri and some intelligent object recognition in its camera apps. The reason you don't hear much about it is that Apple has mostly been secretive about its AI R&D, a stance the AI community has criticized for years. That changed earlier this month, when Apple promised to start publishing its AI research. The first of those papers has now been released.

Apple's first ever public AI research paper deals with one of the biggest problems in AI, at least next to natural language processing. That is image recognition, which is applied in fields like computer vision, facial recognition, augmented reality applications, and, well, chat bots. To be specific, the paper tackles the problem of how artificial intelligence systems are trained to recognize images.

There are currently two methods in use, representing two extremes of learning. One might immediately presume that real-world images should be used in teaching computers to recognize images. While that is definitely the ideal, it is also the hardest to get right. Easiest for AI is the use of synthetic images, images that have already been prepared, labeled, and annotated for AI learning. The downside of this method is that, when faced with real-world images, the AI gets dumbfounded.

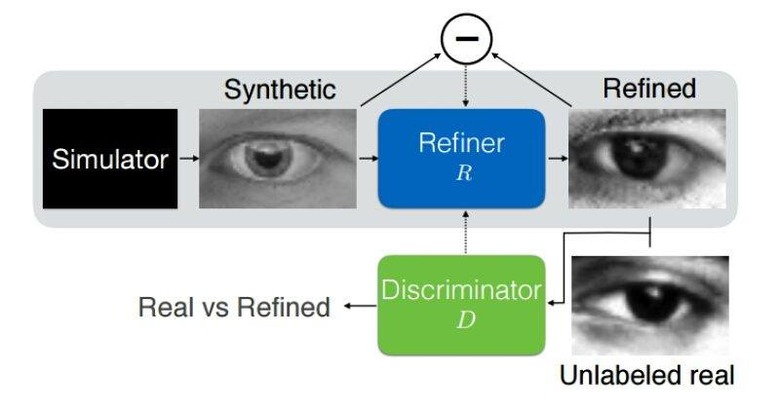

Apple's solution is something of a middle ground between the two, a modification of a Simulated+Unsupervised (S+U) learning method. It uses an adversarial tactic which, in a nutshell, trains one AI to improve the realism of a synthetic image to the point that another AI can no longer distinguish it from the real thing.
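For the curious, the tug-of-war described above can be sketched with the standard cross-entropy losses used in adversarial training. This is only an illustration under assumptions: the function names `discriminator_loss` and `refiner_loss` are hypothetical, the probabilities are made-up numbers rather than results, and the paper's actual objective may include terms not shown here.

```python
import math

def discriminator_loss(p_real, p_refined):
    """Cross-entropy loss for the AI that judges images.

    p_real:    its estimated probabilities of "real" for genuine images
    p_refined: its estimated probabilities of "real" for refined synthetic images
    It is rewarded for scoring real images high and refined ones low.
    """
    real_term = -sum(math.log(p) for p in p_real) / len(p_real)
    fake_term = -sum(math.log(1.0 - p) for p in p_refined) / len(p_refined)
    return real_term + fake_term

def refiner_loss(p_refined):
    """Loss for the AI that refines synthetic images.

    It is rewarded when the judge scores its refined images as "real".
    """
    return -sum(math.log(p) for p in p_refined) / len(p_refined)

# Illustrative numbers only (assumed, not taken from the paper).
early = refiner_loss([0.1, 0.2])   # the judge easily spots refined images
late = refiner_loss([0.5, 0.5])    # the judge is reduced to guessing
chance = discriminator_loss([0.5, 0.5], [0.5, 0.5])

print(early > late)   # the refiner's loss falls as its images fool the judge
print(chance)         # the judge's loss when it cannot tell the two apart
```

When the judge outputs 0.5 for everything, it is effectively flipping a coin, which is the "can no longer distinguish" point the method aims for.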

Apple's AI paper might not be groundbreaking, though it will definitely be of interest to the AI community. What it does represent is a significant change in direction for the company. Whether Apple intends to keep up that change, and for how long, remains to be seen, and no AI yet exists that can predict that.

SOURCE: Apple