Drivers, not ones and zeroes, the biggest roadblock to autonomous cars

Tesla's Autopilot is in safety regulators' crosshairs after one driver died using the system, but the NHTSA's own research suggests unrealistic expectations and human nature may be the biggest risk to semi-automated cars. The crash, in May 2016, saw Joshua D. Brown, 40, of Canton, Ohio, die after his 2015 Model S struck a tractor-trailer crossing the divided highway on which he was using Autopilot.

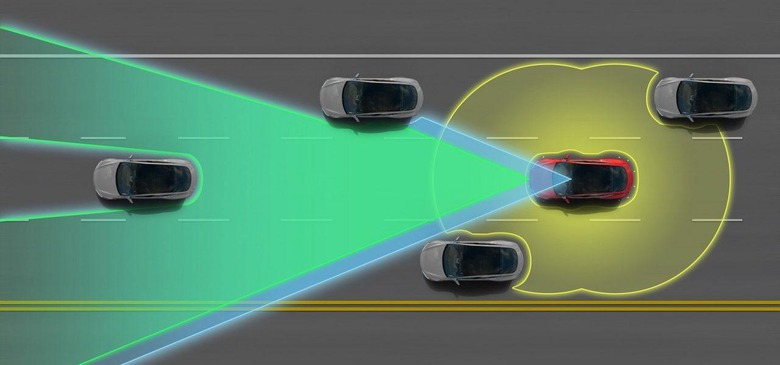

Autopilot may have a name that brings to mind full autonomy, but the functionality is really more akin to an advanced form of adaptive cruise control. Originally limited to high-end luxury sedans, but increasingly available on more mainstream cars, adaptive cruise builds on traditional, fixed-speed cruise control by reacting to traffic around the vehicle.

So, if you're on the highway and vehicles ahead begin to slow, adaptive cruise control will automatically slow your car accordingly. As they speed up, your car will get faster too, until it reaches whatever limit you set.
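
To make that behavior concrete, here is a minimal Python sketch of the speed-selection logic. It is an illustration only, not any automaker's implementation: the function name `acc_target_speed` and its parameters are hypothetical, and a real system would also have to manage following distance, acceleration limits, and sensor uncertainty.

```python
def acc_target_speed(set_speed, lead_speed=None):
    """Return the speed the car should aim for (hypothetical helper).

    set_speed:  the limit chosen by the driver, in any consistent unit.
    lead_speed: speed of the vehicle ahead, or None if the lane is clear.
    """
    if lead_speed is None:
        # Nothing ahead: behave like traditional fixed-speed cruise control.
        return set_speed
    # Follow slower traffic, but never exceed the driver's limit
    # and never ask for a negative speed.
    return max(0.0, min(set_speed, lead_speed))


print(acc_target_speed(70, 55))  # traffic ahead is slower, so match it
print(acc_target_speed(70, 80))  # traffic ahead is faster, so hold the limit
```

The whole idea fits in one line: the car tracks the slower of the two speeds, so the driver's setting acts as a ceiling rather than a fixed target.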

The capabilities of adaptive cruise systems vary by manufacturer, and according to each automaker's interpretation of safety versus convenience. Typical variations include how slow the system will allow the car to go, and whether it will automatically resume after the vehicle has been stationary.

On top of that are lane-assist and lane-follow systems. Again, these vary in their helpfulness, from merely warning if you start to drift out of the lane, through nudging you back inside the lines if that happens, to systems that can track road markings and attempt to keep you in the middle of the lane.

Just as with adaptive cruise, each manufacturer offering lane guidance has a different take on how it should operate. Some systems step in only at the last moment, ping-ponging you between the lines if left to their own devices; others are smoother and more balanced, taking effect much earlier. The amount of time you can have your hands off the wheel before getting a warning varies, too, as does what happens next: whether the system deactivates or even begins to slow the car to a stop.

Tesla's Autopilot has undoubtedly gone the furthest of any of the production systems in allowing the driver to scale back their active involvement in the driving process. With its combination of radar and cameras, it not only follows prevailing traffic speeds and keeps inside lanes, but allows the driver to remove their hands completely from the wheel for extended periods.

The automaker has been clear from the start not only that Autopilot is still a beta feature, but that it still demands the attentiveness of whoever is behind the wheel. Indeed, after delivering the original functionality in an over-the-air update to the Model S, Tesla subsequently modified its behavior to require more involvement, in part prompted by videos shared online showing people leaving the driver's seat and other stunts.

If there was a disconnect, it was arguably down to the way Autopilot was hyped by many while still, at its core, being a Level 2 automation system as defined by the NHTSA.

"This level involves automation of at least two primary control functions designed to work in unison to relieve the driver of control of those functions," the NHTSA describes. "An example of combined functions enabling a Level 2 system is adaptive cruise control in combination with lane centering."

A Level 2 system requires the driver be alert to possible hazards and poised to retake all control from the car should a peril occur. That situation certainly tallies with the incident involving Tesla's Autopilot, where the Model S was apparently unable to see the tractor-trailer because of its white color and bright light conditions.

Unfortunately for the driver, they were not in the necessary state of readiness to assume control of the vehicle. It's unclear exactly what was happening in the Tesla – though the driver of the truck involved in the crash told the AP that he believes the Model S driver was watching a Harry Potter movie while in motion, the automaker tells me that you cannot stream video or movies on any Tesla touchscreen – but it's fairly clear that they did not see the accident about to take place.

In a 2015 NHTSA study [pdf link] of Level 2 and Level 3 automated driving concepts – which did not specifically use Tesla cars, since Autopilot was not publicly rolled out until October that year – the safety agency explored some of the issues such systems face in trying to ensure driver attentiveness.

One part of the study tested road-monitoring behavior when reminders were issued after either two or seven consecutive seconds of inattention. One problem the researchers identified is that the more frequent prompts had less impact each time, while the less frequent prompts worked but also involved extended periods of inattentiveness that could reduce overall safety.

"The 7-second prompts increased participants' attention to the road after they were presented. This increase was also sustained over time. However, because they were only issued when participants exhibited 7 consecutive seconds of inattention, they were presented only when participants were extremely inattentive to the driving environment. This is reflected in the low monitoring rate observed before the prompts. In contrast, the 2-second prompts were not found to increase participants' attention to the driving environment after they were presented. However, they did lead to the highest amount of attentiveness over the course of the experiment." – NHTSA

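The trade-off the researchers describe can be seen in a toy model. The Python sketch below is my own illustration, not code from the NHTSA study: it assumes one attentive-or-not reading per second, a hypothetical function named `prompt_times`, and a counter that restarts after each prompt.

```python
def prompt_times(attentive, threshold):
    """Return the seconds at which a prompt would fire (hypothetical helper).

    attentive: one boolean per second, True if the driver is watching the road.
    threshold: consecutive inattentive seconds needed to trigger a prompt.
    """
    times = []
    run = 0  # length of the current streak of inattentive seconds
    for second, looking in enumerate(attentive):
        if looking:
            run = 0
            continue
        run += 1
        if run == threshold:
            times.append(second)
            run = 0  # assumption: the counter restarts after each prompt
    return times


# Seven seconds looking away, one second looking at the road, three more away.
trace = [False] * 7 + [True] + [False] * 3
print(prompt_times(trace, 2))  # [1, 3, 5, 9]
print(prompt_times(trace, 7))  # [6]
```

During the opening seven-second lapse, the 2-second rule fires three times while the 7-second rule fires once, and only at the very end. That is the bind in miniature: frequent prompts interrupt early but become routine, while rare prompts arrive only once the driver is already badly distracted.
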
The NHTSA's study used lane drift as a hazard example, and found that while participants reported trusting the Level 2 automation before, during, and after experiencing it, once a lane-drift incident had taken place "without an alert [they] lost some trust in the automation."

"This suggests that operators may have somewhat calibrated their trust to the capabilities of the automation," the researchers concluded.

Tesla's owner's manual for Autopilot is clear that there are many situations that the system cannot handle well, including the possibility that bright light could affect the camera's view. Indeed, whenever it is activated, Autopilot shows a reminder to "Always keep your hands on the wheel. Be prepared to take over at any time."

Nonetheless, even just a little awareness of human nature suggests that, no matter the warnings, for many drivers these advanced cruise control or piloted-driving systems will be seen as an opportunity to abdicate responsibility to a greater degree than Level 2 technology is capable of dealing with.

That may or may not be the fault of the technology itself, but it is a fact of life that all automakers will need to contend with as cars get closer to – but not quite reach – full autonomy.

In the meantime, I suspect investigations like the NHTSA's into whether Autopilot did what was expected of it are likely to become more frequent, and it's unclear whether self-driving vehicles will arrive before safety regulators feel the need to weigh in with a heavier hand and clamp down on public deployments of cutting-edge systems.